Yes, RAID 5 can lose one drive and keep functioning, but until that drive is replaced and rebuilt, the array has no redundancy left. RAID 5 is a storage technology that uses distributed parity to provide fault tolerance and improve storage reliability.

What is RAID 5?

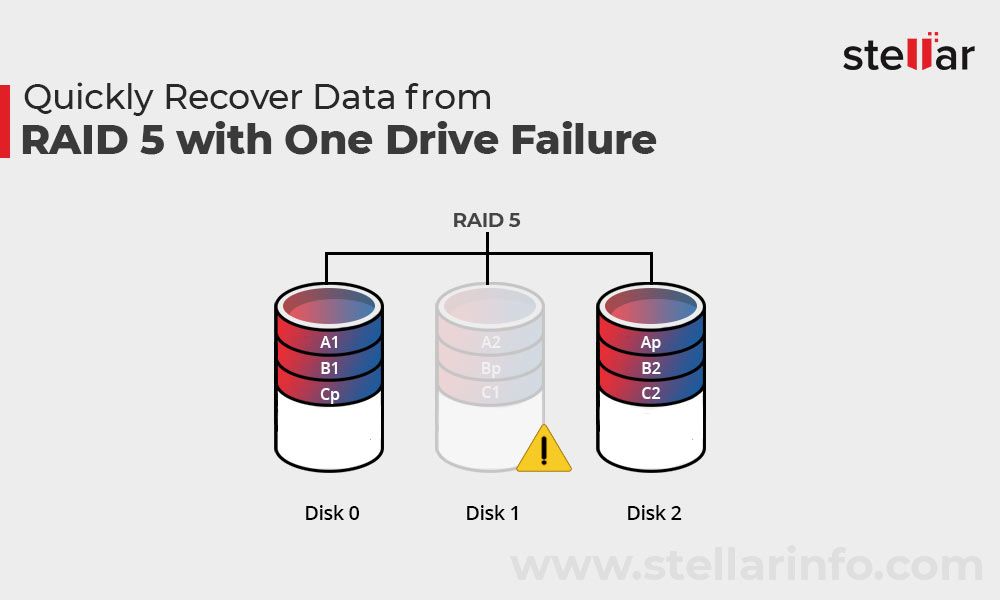

RAID 5 is a storage technology that stripes data across multiple disks and uses distributed parity to provide fault tolerance. Here are some key points about RAID 5:

- Data is striped across multiple disks in a RAID 5 array, similar to RAID 0.

- Parity information is distributed across all the disks and used to reconstruct data in case of disk failure.

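- RAID 5 requires a minimum of 3 disks. In each stripe, N-1 disks hold data blocks and one disk holds the parity block. The parity block rotates to a different disk on each stripe, so there is no dedicated parity disk.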

- If one disk fails, its contents can be reconstructed from the data and parity blocks on the remaining disks.

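- RAID 5 provides good read performance because reads are spread across all disks. Small writes are slower: each one must read the old data and old parity, then write the new data and new parity, which is four I/Os instead of one.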

In summary, RAID 5 provides fault tolerance by allowing recovery from a single disk failure. Data is striped for performance while parity enables redundancy.
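The layout and the parity arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not a controller implementation. Blocks are in-memory byte strings, and all function names are hypothetical. The rotation rule in `parity_disk_for`, where parity moves one disk to the left on each stripe, is an assumed example; real controllers use several rotation variants. `small_write_parity` shows where the extra write I/O comes from.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def parity_disk_for(stripe_index, num_disks):
    """Disk holding parity for a stripe. Assumed rotation: parity moves one
    disk to the left on each successive stripe (real layouts vary)."""
    return (num_disks - 1 - stripe_index) % num_disks

def build_stripe(stripe_index, data_chunks):
    """Lay out N-1 data chunks plus their XOR parity across N disks."""
    num_disks = len(data_chunks) + 1
    row = list(data_chunks)
    row.insert(parity_disk_for(stripe_index, num_disks), xor_blocks(data_chunks))
    return row

def small_write_parity(old_data, new_data, old_parity):
    """Read-modify-write: after reading old data and old parity (2 reads),
    new parity = old_parity XOR old_data XOR new_data. Writing the new data
    and the new parity adds 2 more I/Os."""
    return xor_blocks([old_parity, old_data, new_data])

# Parity position for stripes 0-3 of a 4-disk array
print([parity_disk_for(s, 4) for s in range(4)])  # [3, 2, 1, 0]
```

Updating parity from the old and new data avoids reading every other disk in the stripe. That shortcut is why a small RAID 5 write costs two reads and two writes rather than a full-stripe read.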

What happens if a disk fails in RAID 5?

If a single disk fails in a RAID 5 array, the data on the failed disk can be reconstructed from the parity information and data on the remaining disks. Here is the recovery process:

- The RAID controller detects the failure when the disk returns I/O errors or stops responding. Background patrol reads and SMART monitoring can also flag a failing disk.

- The failed disk is marked as failed and taken offline by the controller.

- When data that lived on the failed disk is read, the RAID controller or software recomputes it by XORing the corresponding data and parity blocks from the remaining disks.

- This calculation process is transparent to the user and application.

- The RAID continues to operate in a degraded mode on N-1 disks until the failed disk is replaced.

- Once the failed disk is replaced, its contents are rebuilt onto the new disk from the data and parity blocks on the remaining disks.
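The XOR reconstruction in these steps can be illustrated directly. This is a minimal sketch: in-memory byte strings stand in for disk blocks, and `degraded_read` is a hypothetical helper, not a controller API. XOR is its own inverse, so the missing block, whether data or parity, equals the XOR of every surviving block in its stripe.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def degraded_read(stripe, failed_disk):
    """Recompute the block that lived on failed_disk by XOR-ing every
    surviving block (data and parity alike) in the same stripe."""
    survivors = [block for disk, block in enumerate(stripe) if disk != failed_disk]
    return xor_blocks(survivors)

# One stripe on a hypothetical 4-disk array: three data blocks plus parity.
data = [b"RAID", b"five", b"demo"]
stripe = data + [xor_blocks(data)]

# Simulate losing disk 1 and reading its block back.
print(degraded_read(stripe, failed_disk=1))  # b'five'
```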

So in summary, a single disk failure in RAID 5 does not by itself cause data loss. The array keeps operating and transparently reconstructs the failed drive's data from parity. However, it has no redundancy left until the failed drive is replaced and rebuilt.

What are the risks of running RAID 5 with a failed drive?

While RAID 5 can survive a single disk failure, running it long-term in a degraded state with a failed drive has some significant risks:

- No fault tolerance – With one disk already failed, the array has lost its redundancy. Any additional disk failure will lead to data loss.

- Increased disk workload – The remaining disks have to work harder to reconstruct the data from the failed drive, leading to performance impact.

- Unrecoverable read errors – With redundancy gone, a bad sector or read error on any surviving disk leaves the affected stripe with no parity to recover from, so that data is lost.

- Double disk failure – The longer the array runs degraded, the greater the cumulative chance that a second disk fails before the replacement has been rebuilt.
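The unrecoverable-read-error risk can be estimated roughly, because reconstructing data from a degraded array means reading the surviving disks. The sketch below is a worst-case illustration only. It assumes errors are independent and occur at exactly the spec-sheet rate (commonly quoted as one per 10^14 or 10^15 bits), and spec figures are upper bounds, so real-world results are usually better. The 6x4TB array is hypothetical, and `p_ure_during_rebuild` is an illustrative name.

```python
import math

def p_ure_during_rebuild(bytes_to_read, ure_rate_bits=1e14):
    """Chance of at least one unrecoverable read error while reading
    bytes_to_read, assuming independent errors at exactly one per
    ure_rate_bits bits (a worst-case, spec-sheet assumption)."""
    bits = bytes_to_read * 8
    # 1 - (1 - 1/rate) ** bits, computed with log1p/expm1 to avoid rounding loss
    return -math.expm1(bits * math.log1p(-1.0 / ure_rate_bits))

# Degraded 6x4TB array: a full rebuild reads all 5 surviving 4 TB disks.
surviving_bytes = 5 * 4e12
print(round(p_ure_during_rebuild(surviving_bytes), 2))        # 0.8  (1 per 10^14 bits)
print(round(p_ure_during_rebuild(surviving_bytes, 1e15), 2))  # 0.15 (1 per 10^15 bits)
```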

To mitigate these risks, it is crucial to replace failed disks promptly in a degraded RAID 5 array. The array should not be operated long-term in a degraded state, especially if the disks are old or have pending bad sectors.

How long can RAID 5 run with a failed drive?

There are no specific guidelines on how long RAID 5 can safely run with a failed drive. It depends on factors like:

- Quality and age of the remaining disks

- Workload and disk utilization

- Temperature and operating environment

- Vibration and handling of the storage enclosure

As a general rule of thumb, here are some recommendations on replacement timelines:

| Disk Condition | Replacement Timeline |
|---|---|
| New enterprise SATA or SAS disks | Up to 1 week |
| Older SATA disks (3-5 years) | 2-3 days |
| Old enterprise SAS disks | 3-5 days |
| Disks with pending/relocated sectors | 24 hours max |

As a best practice, enterprises often aim to replace failed disks within 24-48 hours if spare disks are readily available onsite. For older disks or arrays running in poor environmental conditions, failed disks should be treated as an emergency and replaced ASAP.
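The effect of the replacement window can be quantified roughly. The sketch below assumes that disks fail independently at a constant rate derived from an annualized failure rate (AFR). The 2% AFR and the 5 surviving disks are illustrative assumptions, and `p_another_failure` is a hypothetical name. The model ignores correlated failures, such as disks from the same batch or extra stress during the rebuild, so real risk is likely higher. Its main point still holds: risk grows roughly in proportion to the number of days spent degraded.

```python
import math

def p_another_failure(remaining_disks, afr, days):
    """Chance that at least one of remaining_disks fails within `days`,
    assuming independent failures at a constant rate derived from an
    annualized failure rate (afr, e.g. 0.02 for 2%)."""
    daily_rate = -math.log1p(-afr) / 365.0  # from AFR = 1 - exp(-365 * daily_rate)
    return -math.expm1(-daily_rate * remaining_disks * days)

# Hypothetical degraded 6-disk array (5 survivors) with an assumed 2% AFR.
for days in (1, 3, 7):
    print(days, f"{p_another_failure(5, 0.02, days):.3%}")
```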

Can RAID 5 be run permanently in degraded mode?

It is not recommended to run RAID 5 permanently in a degraded state on N-1 disks. While the array will technically continue to function, there are significant downsides:

- No fault tolerance – risk of data loss with another disk failure.

- Performance impact – decreased throughput due to overhead of reconstructing missing data.

- Increased disk stress – remaining disks have to work harder.

- Difficult to maintain – juggling spare disks becomes challenging.

- Complicates support – troubleshooting issues is more difficult.

The savings from not buying a replacement disk are generally not worth the risk and complexity of permanently running RAID 5 in a degraded state. Exceptions could be made for cold storage or archival data that sees infrequent access and where some data loss can be tolerated.

Should RAID 5 arrays be rebuilt after disk replacement?

Yes, it is crucial to rebuild a RAID 5 array after replacing a failed disk. The rebuild process restores full redundancy and prevents data loss in case of another disk failure. Here is what happens during a RAID 5 rebuild:

- The new replacement disk is inserted and identified by the RAID controller.

- The controller begins writing data to the new disk using parity data and data from the remaining disks.

- The rebuild priority can be adjusted based on the workload. A high priority finishes sooner but slows production I/O, while a low priority does the reverse.

- The rebuild completes when all data and parity have been restored to the new disk.

- The array goes back to normal operating mode with full redundancy.
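The rebuild steps above can be sketched as a loop over stripes. This is a minimal illustration. `disks` is an in-memory stand-in for real drives (a list of per-disk block lists), and `rebuild` is a hypothetical name. A real controller also throttles this loop according to the rebuild priority.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def rebuild(disks, failed_disk):
    """Regenerate the failed member's contents, stripe by stripe. Whether a
    missing block held data or parity, it equals the XOR of the surviving
    blocks in its stripe. The entry at failed_disk is ignored."""
    survivors = [blocks for i, blocks in enumerate(disks) if i != failed_disk]
    return [xor_blocks([blocks[s] for blocks in survivors])
            for s in range(len(survivors[0]))]

# Hypothetical 3-disk array with two stripes and rotating parity.
disks = [
    [b"ab", b"ef"],
    [b"cd", xor_blocks([b"ef", b"gh"])],  # stripe 1 parity lives on disk 1
    [xor_blocks([b"ab", b"cd"]), b"gh"],  # stripe 0 parity lives on disk 2
]
lost = disks[1]
disks[1] = None  # disk 1 fails; its replacement starts out blank
print(rebuild(disks, failed_disk=1) == lost)  # True
```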

Not performing the rebuild leaves the array in a degraded state and vulnerable to data loss. The performance penalties and disk stress also persist until the rebuild is completed. However, the rebuild process does introduce additional load, so timing it appropriately is recommended.

How long does it take to rebuild a RAID 5 array?

The RAID 5 rebuild time depends on several factors:

- Disk capacity – Higher capacity disks take longer to rebuild.

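- Number of disks – The rebuild writes only one disk's worth of data. However, each reconstructed block requires reading the matching blocks from every surviving disk, so wider arrays put more read load on the disks and controller.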

- Rebuild priority – Low impact rebuilds are slower but high impact affects production I/O.

- Disk speed – Faster drives can rebuild faster.

- Activity level – Rebuild times increase if the array is busy with production workloads.

As a general estimate, the rebuild time is bounded by how fast the replacement disk can be written:

Rebuild time ≈ Capacity of the replaced disk / Sustained rebuild speed

For example, rebuilding one failed disk in a 6x4TB RAID 5 array at a sustained rebuild speed of 100MB/s will take at least:

4TB / 100MB/s = 4,000,000MB / 100MB/s = 40,000 seconds ≈ 11 hours 7 minutes

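Actual rebuild times are often longer, because production I/O competes with the rebuild for disk bandwidth. For mission critical systems, a redundant standby array can take over the workload, so service does not depend on a long rebuild completing.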
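A quick calculator makes the estimate easy to rerun for other disk sizes and speeds. This is a minimal sketch, assuming decimal units as used on drive labels (1 TB = 1,000,000 MB) and a constant sustained rate; `rebuild_hours` is a hypothetical name. It gives a lower bound, because the rate under production load is usually lower.

```python
def rebuild_hours(disk_capacity_tb, rebuild_mb_per_s):
    """Lower-bound rebuild time: the replacement disk is written end to end
    at a sustained rate. Decimal units, as on drive labels (1 TB = 10^6 MB)."""
    seconds = disk_capacity_tb * 1_000_000 / rebuild_mb_per_s
    return seconds / 3600

print(round(rebuild_hours(4, 100), 1))  # 11.1 hours for a 4 TB member at 100 MB/s
print(round(rebuild_hours(4, 50), 1))   # 22.2 hours if production load halves the rate
```

The array's disk count does not appear in the formula. More disks change how much must be read to reconstruct each block, which affects the achievable rate, but not how much must be written.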

Can a RAID 5 rebuild be paused and resumed?

Yes, most RAID controllers allow pausing and resuming a RAID 5 rebuild process. This can be useful when:

- Rebuild is slowing production workloads and needs to be paused.

- Maintenance tasks require shutting down the storage system.

- Rebuild needs to be throttled during peak activity hours.

When the rebuild is paused, the process stops writing data to the new drive. When resumed, it continues from where it left off. However, pausing too frequently can prolong the rebuild time and exposure to data loss.

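The rebuild pause/resume feature requires controller support and may not be available on some older RAID cards. To resume correctly, the controller must record how far the rebuild got (a checkpoint). This is typically stored in nonvolatile storage such as on-disk RAID metadata or battery- or flash-backed controller memory.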
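Pause and resume only work because the rebuild's progress is remembered. This is a minimal sketch, assuming the checkpoint is simply the index of the next stripe to rebuild and is held in a variable. A real controller would persist it in nonvolatile metadata. `rebuild_steps` is a hypothetical name, and the block contents are toy values for a 3-disk array whose disk 1 has failed.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def rebuild_steps(disks, failed_disk, replacement, checkpoint=0):
    """Rebuild stripes from `checkpoint` onward. After each stripe, yield
    the new checkpoint (the first stripe not yet rebuilt) so the caller
    can stop here and resume later."""
    survivors = [blocks for i, blocks in enumerate(disks) if i != failed_disk]
    for s in range(checkpoint, len(survivors[0])):
        replacement[s] = xor_blocks([blocks[s] for blocks in survivors])
        yield s + 1

disks = [[b"\x01", b"\x02", b"\x03"], None, [b"\x01", b"\x06", b"\x00"]]
replacement = [None] * 3

checkpoint = 0
for checkpoint in rebuild_steps(disks, 1, replacement):
    if checkpoint == 2:  # e.g. peak hours begin: pause after two stripes
        break

# Later: resume from the saved checkpoint instead of starting over.
for checkpoint in rebuild_steps(disks, 1, replacement, checkpoint):
    pass
print(replacement)  # [b'\x00', b'\x04', b'\x03']
```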

Can a new RAID 5 array be created without initializing disks?

It is generally not recommended to create a RAID 5 array without initializing the member disks first. Here are some considerations:

- Initializing writes consistent parity to every stripe. A full initialization also touches every sector, surfacing bad sectors so they can be remapped before use.

- Initializing erases existing data and encryption if disks were previously used.

- Skipping initialization could cause data corruption if bad areas are not mapped out, or if parity does not match the data when a later rebuild relies on it.

- Unaligned partitions or existing data can degrade RAID performance.

- Many RAID controllers require a manual override to skip initialization.

However, initialization can be skipped if the disks:

- Are new with no existing data or partitions.

- Had bad blocks remapped by the manufacturer.

- Will only be used for temporary cache or scratch space.

In summary, while possible, it is not normally recommended to create production RAID 5 arrays without initializing disks first. The risks of data corruption generally outweigh the time savings.
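Parity initialization matters because of one invariant: in every RAID 5 stripe, the XOR of all blocks (data plus parity) must be zero. A controller's consistency check tests roughly this invariant. If a stripe violates it, a rebuild would reconstruct incorrect data for the missing disk. This is a minimal sketch that assumes zero-fill initialization; some controllers instead compute parity from whatever the disks already hold. The function names are hypothetical.

```python
from functools import reduce

def stripe_is_consistent(stripe):
    """A stripe is consistent when the XOR of all its blocks, data and
    parity together, is all zero bytes."""
    return all(reduce(lambda a, b: a ^ b, column) == 0 for column in zip(*stripe))

def inconsistent_stripes(stripes):
    """Indices of stripes whose parity does not match their data."""
    return [i for i, stripe in enumerate(stripes) if not stripe_is_consistent(stripe)]

initialized = [b"\x00\x00"] * 3                      # zero-filled: trivially consistent
leftover = [b"\x5a\x01", b"\x33\x07", b"\x00\x00"]   # old data, parity never written
print(inconsistent_stripes([initialized, leftover]))  # [1]
```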

Should RAID 5 disk order be identical when replacing multiple disks?

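When replacing multiple disks in RAID 5, for example after successive failures or during a drive upgrade, swap and rebuild them one at a time, because RAID 5 can reconstruct only one missing member at a time. It is generally recommended to keep the same physical disk order if space allows. Here are some benefits of keeping the original disk order: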

- Simplifies administration of spare disks and slots.

- Reduces the risk of pulling the wrong disk, which in a degraded RAID 5 array would take a second member offline and fail the array.

- May improve performance by maintaining original stripes.

- Requires no rearrangement of existing disk data.

However, maintaining the original disk order is not strictly required, and there are cases where it may be changed:

- Insufficient free slots to insert disks in the same locations.

- Transitioning from old disks to new larger capacity disks.

- Reconfiguring layout from hardware to software RAID.

As long as the RAID controller is notified of the disk layout change, altering the disk order does not impact data integrity. But performance may suffer until data is fully rebuilt and restriped.

Can RAID 5 disk sizes be mismatched?

RAID 5 does allow disks of varying sizes to be used together; however, this comes with some caveats:

- Each disk contributes only as much space as the smallest disk, so usable capacity is (N-1) × the smallest disk's size.

- Larger disks will have unused space that remains inaccessible.

- Performance may suffer due to misaligned stripes and complicated management.

- Rebuilds take longer as ALL disk space has to be rewritten.

- Not recommended for new deployments, only for replacing old disks.

While possible, mismatched disks in RAID 5 introduce complexity that makes features like capacity expansion and migration harder. For new arrays, matching disks is highly recommended.

That said, incrementally replacing old small disks with larger new ones, one rebuild at a time, can be used as a transition strategy. The extra capacity becomes usable only after every disk has been replaced and the array has been expanded.
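The capacity arithmetic for mixed disk sizes can be sketched as follows. This assumes a conventional RAID 5 set in which every member contributes the smallest disk's size; some software stacks can pool leftover space separately. `raid5_usable_tb` is a hypothetical name. The example follows the transition strategy above: two of four 2 TB disks have already been swapped for 4 TB disks.

```python
def raid5_usable_tb(disk_sizes_tb):
    """Return (usable, stranded) capacity for a conventional RAID 5 set:
    each member contributes only the smallest disk's size, and one disk's
    worth of that goes to parity."""
    if len(disk_sizes_tb) < 3:
        raise ValueError("RAID 5 needs at least 3 disks")
    smallest = min(disk_sizes_tb)
    usable = smallest * (len(disk_sizes_tb) - 1)
    stranded = sum(size - smallest for size in disk_sizes_tb)
    return usable, stranded

print(raid5_usable_tb([2, 2, 4, 4]))  # (6, 4): half of each new disk sits idle
print(raid5_usable_tb([4, 4, 4, 4]))  # (12, 0): after the last swap and an expand
```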

Should RAID 5 or RAID 6 be used for better redundancy?

RAID 6 offers better redundancy compared to RAID 5 and is recommended for arrays using large capacity disks or containing critical data. Here is a comparison:

| | RAID 5 | RAID 6 |
|---|---|---|
| Parity overhead | 1 disk's worth (distributed) | 2 disks' worth (distributed) |
| Fault tolerance | 1 disk failure | 2 disk failures |
| Usable capacity | N-1 disks | N-2 disks |
| Rebuild times | Faster | Slower |
| Minimum disks | 3 | 4 |

The dual parity in RAID 6 provides significantly better protection against multi-disk failures. But RAID 6 does have higher capacity overhead and slower rebuilds. In general, RAID 6 is recommended for:

- Disks larger than 2TB due to long rebuild risks.

- Arrays with 10+ disks where multi-disk failures are more likely.

- Situations where downtime costs outweigh capacity concerns.

RAID 5 may still be suitable for smaller arrays and non-critical data where performance and capacity are bigger concerns.
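The capacity side of this trade-off is simple to compute for a planned array. This is a minimal sketch, assuming N identical disks. `compare_raid5_raid6` is a hypothetical name, and the 8x4TB configuration is illustrative.

```python
def compare_raid5_raid6(num_disks, disk_tb):
    """Usable capacity and guaranteed fault tolerance for RAID 5 and RAID 6
    built from num_disks identical disks. Levels below their minimum disk
    count are skipped."""
    results = {}
    for level, parity in (("RAID 5", 1), ("RAID 6", 2)):
        if num_disks < parity + 2:  # minimums: 3 disks for RAID 5, 4 for RAID 6
            continue
        results[level] = {
            "usable_tb": (num_disks - parity) * disk_tb,
            "disk_failures_tolerated": parity,
        }
    return results

for level, info in compare_raid5_raid6(8, 4).items():
    print(level, info)
```

Giving up one more disk's worth of capacity buys a second level of protection. In the degraded state, that second level is what still covers a read error or another failure during the rebuild.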

Conclusion

In summary, RAID 5 can survive a single disk failure but it is not recommended to run it long-term in a degraded state. The failed disk should be replaced promptly to restore redundancy and prevent data loss from additional failures. When sizing new storage, RAID 6 offers better protection for large drive capacities and mission critical data. But RAID 5 remains viable for smaller, less critical arrays where capacity and performance matter more.