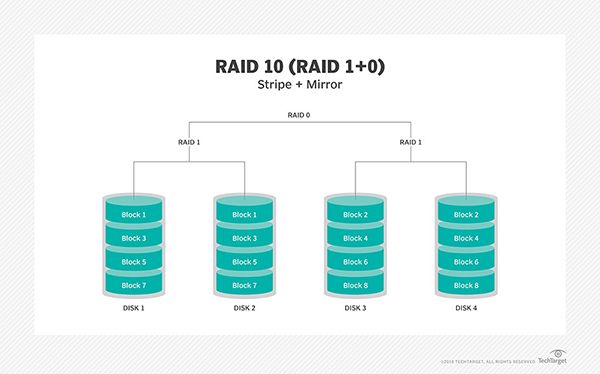

RAID 10, also known as RAID 1+0, is a hybrid RAID configuration that combines disk mirroring and disk striping to provide fault tolerance and improved performance. In an 8-drive RAID 10 array, the drives are configured as 4 mirrored pairs: 4 RAID 1 arrays that are then striped together in a RAID 0 configuration. The key benefit of RAID 10 is that it can withstand multiple drive failures, as long as no more than 1 drive fails per mirrored set.

RAID 10 Overview

RAID 10 requires an even number of drives, as drives are mirrored in pairs. Data is striped across the RAID 1 mirror sets (the RAID 0 layer), while each RAID 1 set provides fault tolerance through mirroring. Every write is duplicated to both drives in the mirrored set that holds it, and a read can be served by either drive. This gives better performance than a single RAID 1 pair, because reads and writes are spread across multiple drives.
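The two layers can be illustrated with a minimal Python sketch. It assumes the drives are numbered so the mirrored pairs are 1/2, 3/4, 5/6, and 7/8 and assumes a 64 KB stripe size; the names `MIRROR_SETS`, `STRIPE_SIZE`, and `locate` are hypothetical, and real controllers may lay data out differently. It maps a logical byte offset to the mirror set that holds it and to the two physical drives that each receive a copy.

```python
# Hypothetical model of an 8-drive RAID 10 array (assumed drive numbering).
MIRROR_SETS = [(1, 2), (3, 4), (5, 6), (7, 8)]  # the RAID 1 layer
STRIPE_SIZE = 64 * 1024                         # assumed 64 KB stripe unit


def locate(offset: int) -> tuple[int, tuple[int, int], int]:
    """Map a logical byte offset to (mirror set index, its two drives, offset on each drive)."""
    chunk = offset // STRIPE_SIZE              # which stripe unit the byte falls in
    set_index = chunk % len(MIRROR_SETS)       # RAID 0 layer: round-robin across mirror sets
    row = chunk // len(MIRROR_SETS)            # full stripes that precede this chunk
    drive_offset = row * STRIPE_SIZE + offset % STRIPE_SIZE
    # A write goes to BOTH drives of the pair; a read can be served by either one.
    return set_index, MIRROR_SETS[set_index], drive_offset


for off in (0, 65536, 4 * 65536 + 10):
    print(off, locate(off))
```

For example, `locate(0)` returns `(0, (1, 2), 0)` and `locate(65536)` returns `(1, (3, 4), 0)`: consecutive 64 KB chunks rotate across the mirror sets, and the fifth chunk wraps back to drives 1 and 2.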

In an 8-drive RAID 10 configuration, the usable storage capacity equals the capacity of 4 drives. Each RAID 1 set stores two copies of its data, so usable capacity is 50% of the raw capacity. For example, with 8 x 2TB drives (16TB raw), the usable capacity in RAID 10 would be 8TB.
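The capacity arithmetic reduces to a one-line formula. This hypothetical helper assumes the classic nested RAID 1+0 layout (an even number of identical drives, at least four) and ignores formatting and metadata overhead.

```python
def raid10_usable_tb(drive_count: int, drive_size_tb: float) -> float:
    """Usable capacity of a classic RAID 1+0 array: half of raw, since every block is stored twice."""
    if drive_count < 4 or drive_count % 2 != 0:
        raise ValueError("classic RAID 1+0 needs an even number of drives, at least 4")
    raw_tb = drive_count * drive_size_tb
    return raw_tb / 2


print(raid10_usable_tb(8, 2))  # 8.0
```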

Drive Failure Tolerance

The drive failure tolerance of RAID 10 depends on which drives fail. Since data is mirrored within each drive pair, RAID 10 can withstand a single drive failure per mirrored set without data loss. However, a second drive failure in the same mirrored set would destroy that mirror, and because every stripe spans all of the mirror sets, the entire array would be lost.

In an 8-drive RAID 10 array made up of 4 RAID 1 sets, up to 4 drives can fail, as long as no more than 1 drive fails per RAID 1 set. Assuming the mirrored pairs are drives 1/2, 3/4, 5/6, and 7/8, if drives 1, 3, 5, and 7 were to fail, the array would keep running in a degraded state. However, if drives 1 and 2 were to fail, data would be lost, since this would break the first RAID 1 mirror.
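Because survival depends on which drives fail, it can help to count how many failure combinations the array survives. This sketch enumerates every combination of k failed drives, assuming the pair numbering 1/2, 3/4, 5/6, 7/8 and treating every combination as equally likely (a simplification; real drive failures are not always independent). The function names are hypothetical.

```python
from itertools import combinations

MIRROR_SETS = [(1, 2), (3, 4), (5, 6), (7, 8)]  # assumed drive numbering
DRIVES = [d for pair in MIRROR_SETS for d in pair]


def survives(failed: set[int]) -> bool:
    """The array survives only if no mirror set has lost both of its drives."""
    return all(not set(pair) <= failed for pair in MIRROR_SETS)


def survival_fraction(k: int) -> float:
    """Fraction of all k-drive failure combinations that the array survives."""
    combos = list(combinations(DRIVES, k))
    return sum(survives(set(c)) for c in combos) / len(combos)


for k in range(1, 6):
    print(k, round(survival_fraction(k), 3))
```

Under these assumptions it prints 1.0, 0.857, 0.571, 0.229, and 0.0 for k = 1 through 5. Any single failure is always survivable, two random failures are survived in 24 of 28 combinations, and four failures only in the 16 of 70 combinations that hit each pair exactly once, so "up to 4 drives" is a best case rather than a guarantee.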

Rebuilding After Drive Failure

When a single drive fails in a mirrored set, the RAID controller will switch the set to a degraded mode, running on the remaining good drive. Data continues to be accessible during this time. The failed drive will need to be replaced and the mirror rebuilt to restore full redundancy.

The rebuild process copies data from the surviving drive to the replacement drive, resynchronizing the mirror. Rebuild times depend on the capacity and performance of the drives and on how much other I/O the array is handling. With large, high-capacity drives, rebuilds can take many hours to complete.
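A rough lower bound on rebuild time is the drive's capacity divided by the sustained rate at which it can be copied. This sketch assumes the controller copies the entire drive and uses an assumed sustained rate of 150 MB/s; real rebuilds are often slower because controllers throttle rebuild traffic to keep serving normal I/O. The helper name is hypothetical.

```python
def min_rebuild_hours(drive_size_tb: float, throughput_mb_s: float) -> float:
    """Lower bound on mirror rebuild time: copy the whole drive at a sustained rate."""
    bytes_to_copy = drive_size_tb * 1e12               # decimal TB, as drive vendors use
    seconds = bytes_to_copy / (throughput_mb_s * 1e6)  # MB/s -> bytes/s
    return seconds / 3600


# Assumed sustained copy rate of 150 MB/s
print(round(min_rebuild_hours(2, 150), 1))   # 3.7
print(round(min_rebuild_hours(16, 150), 1))  # 29.6
```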

During the rebuild, the degraded mirrored set has no redundancy. If the surviving drive in that set fails before the rebuild completes, data will be lost. To minimize this risk, failed drives should be replaced as soon as possible.

Example Drive Failure Scenarios

Here are some examples of drive failure scenarios in an 8-drive RAID 10 array, assuming the mirrored pairs are drives 1/2 (set 1), 3/4 (set 2), 5/6 (set 3), and 7/8 (set 4), and whether each one causes data loss:

Scenario 1: Drives 1 and 2 Fail

Drives 1 and 2 are both members of RAID 1 mirror set 1. With both drives failed, this mirror is broken and its data has no surviving copy. Because every stripe in the RAID 10 array includes a chunk stored on set 1, the other 3 RAID 1 sets cannot supply that data on their own. The entire RAID 10 array fails, even though only 2 of its 8 drives are down.

Scenario 2: Drives 1, 3, 5, and 7 Fail

In this scenario, one drive fails in each of the 4 RAID 1 sets. Although 4 drives have failed overall, no single RAID 1 set lost both of its drives. So no data would be lost, and the RAID 10 array would keep running in a degraded state until the failed drives are replaced.

Scenario 3: Drives 1, 2, 5, and 6 Fail

Here both drives failed in RAID 1 set 1 (drives 1/2) and both drives failed in RAID 1 set 3 (drives 5/6). With two mirrored sets broken, the array fails and its data is lost. The remaining RAID 1 sets, made up of drives 3/4 and 7/8, hold only half of each stripe, so they cannot keep the array running on their own.
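Because the RAID 0 layer stripes data across all four mirror sets, every stripe has a chunk on each set, so losing both drives of any one set takes the whole array offline. This sketch checks the three scenarios above against that rule, assuming the pair numbering 1/2, 3/4, 5/6, 7/8; `broken_sets` is a hypothetical helper name.

```python
# Assumed numbering: mirror set number -> its two drives.
MIRROR_SETS = {1: (1, 2), 2: (3, 4), 3: (5, 6), 4: (7, 8)}


def broken_sets(failed: set[int]) -> list[int]:
    """Return the mirror sets that lost both drives; any entry means the whole array is down."""
    return [n for n, pair in MIRROR_SETS.items() if set(pair) <= failed]


scenarios = {
    "Scenario 1": {1, 2},
    "Scenario 2": {1, 3, 5, 7},
    "Scenario 3": {1, 2, 5, 6},
}
for name, failed in scenarios.items():
    lost = broken_sets(failed)
    status = f"array offline, mirror sets {lost} lost" if lost else "array online (degraded)"
    print(f"{name}: {status}")
```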

Optimal RAID 10 Layout

With RAID 10, the layout of the RAID 1 mirrored sets across physical drives can impact performance and fault tolerance. There are two main layout methods:

RAID 10 Layout Methods

- Mirroring across controllers – The two drives in each mirrored pair are attached to different controllers. This provides fault tolerance if a storage controller fails.

- Mirroring within controllers – Both drives in each mirrored pair are attached to the same controller. This can help performance by keeping each mirror's I/O on a single controller, but a controller failure takes both copies offline.

In an 8-drive array, mirroring across controllers provides the highest fault tolerance by protecting against a controller failure. In larger arrays, mirroring within controllers may improve performance since I/O is handled locally, at the cost of that protection.
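The fault-tolerance difference between the two layouts can be checked with a small model. The cabling here is hypothetical (drives 1 to 4 on controller A, drives 5 to 8 on controller B), and the sketch only tests whether every mirror keeps at least one reachable drive after a controller fails; it says nothing about performance.

```python
# Hypothetical cabling: drives 1-4 on controller A, drives 5-8 on controller B.
CONTROLLER = {d: ("A" if d <= 4 else "B") for d in range(1, 9)}

ACROSS = [(1, 5), (2, 6), (3, 7), (4, 8)]  # each mirror spans both controllers
WITHIN = [(1, 2), (3, 4), (5, 6), (7, 8)]  # each mirror sits on one controller


def survives_controller_loss(pairs: list[tuple[int, int]], dead: str) -> bool:
    """True if every mirror keeps at least one drive on a working controller."""
    return all(any(CONTROLLER[d] != dead for d in pair) for pair in pairs)


for name, pairs in (("across", ACROSS), ("within", WITHIN)):
    print(name, survives_controller_loss(pairs, "A"))
```

With controller A down, the cross-controller layout keeps every mirror alive, while the within-controller layout loses mirrors (1, 2) and (3, 4) and with them the whole array.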

RAID 10 controllers typically allow the stripe size to be configured: the amount of contiguous data written to one mirrored set before moving on to the next. A larger stripe size can improve sequential I/O performance. Typical stripe sizes are 64KB, 128KB, 256KB, or 512KB.
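The stripe size determines how many mirror sets a single request touches. This sketch assumes 4 mirror sets, round-robin placement of stripe units, and a 256 KB request starting on a stripe boundary; `sets_touched` is a hypothetical helper.

```python
def sets_touched(offset: int, length: int, stripe_kb: int, mirror_sets: int = 4) -> int:
    """Number of distinct mirror sets touched by one request of `length` bytes at `offset`."""
    stripe = stripe_kb * 1024
    first = offset // stripe                 # first stripe unit the request touches
    last = (offset + length - 1) // stripe   # last stripe unit the request touches
    # Consecutive stripe units rotate across the sets, so the count caps at mirror_sets.
    return min(last - first + 1, mirror_sets)


for kb in (64, 128, 256, 512):
    print(kb, sets_touched(0, 256 * 1024, kb))
```

It prints 4, 2, 1, and 1 sets for stripe sizes of 64, 128, 256, and 512 KB. Small stripes spread one request over more drives; large stripes keep it on one mirror set, leaving the others free for concurrent requests.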

RAID Controller Cache

The cache on the storage controller can also impact RAID 10 performance. A battery-backed write cache absorbs bursts of writes and lets the controller coalesce them before they reach the drives, while the battery protects cached data if power is lost. Solid state drives (SSDs) are sometimes used as read caches to improve read performance.

When to Use RAID 10

RAID 10 provides a good blend of performance and fault tolerance for mission-critical applications that require high availability. Its performance advantages over RAID 1, and the absence of parity calculations on writes, make it well suited for transactional workloads that require low-latency random I/O.
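A common rule of thumb for small random writes is the write penalty: RAID 10 needs 2 physical writes per logical write (one per mirror copy), while RAID 5 needs 4 and RAID 6 needs 6 because of parity read-modify-write. This sketch applies that rule to 8 drives at an assumed 150 random IOPS per drive; it ignores controller caching and full-stripe writes, so treat the numbers as relative rather than absolute.

```python
# Physical I/Os per small random logical write (rule-of-thumb write penalty).
WRITE_PENALTY = {"RAID 10": 2, "RAID 5": 4, "RAID 6": 6}


def random_write_iops(level: str, drives: int, iops_per_drive: int) -> float:
    """Approximate random-write IOPS: total drive IOPS divided by the write penalty."""
    return drives * iops_per_drive / WRITE_PENALTY[level]


for level in WRITE_PENALTY:
    print(level, random_write_iops(level, 8, 150))  # assumed 150 IOPS per drive
```

Under these assumptions RAID 10 delivers about 600 random-write IOPS, versus about 300 for RAID 5 and 200 for RAID 6 on the same 8 drives.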

Some examples of workloads where RAID 10 can be beneficial include:

- Database servers

- Virtualization and VDI environments

- Busy websites and web applications

- High performance computing

- Video editing and media streaming

The downside is storage efficiency: usable capacity is half of what a non-mirrored configuration would provide. RAID 10 works best for performance-sensitive applications where that 50% capacity overhead, and the need for an even number of drives, are acceptable.

Alternatives to RAID 10

Other RAID levels to consider that provide fault tolerance without quite as much capacity loss include:

RAID 6

RAID 6 uses dual parity to protect against two drive failures. It provides fault tolerance without needing to mirror drives. Capacity is reduced by two drives for parity. RAID 6 is ideal for large arrays when RAID 10 would have prohibitive capacity loss.

RAID 50

RAID 50 stripes data (RAID 0) across two or more RAID 5 sets, each of which uses distributed single parity. It tolerates one drive failure per RAID 5 set and loses less capacity than RAID 10, but its random-write performance is lower because of the parity overhead.

RAID 60

RAID 60 combines the straight block-level striping of RAID 0 with the distributed double parity of RAID 6. It requires at least 8 drives and provides fault tolerance for up to two failures in each RAID 6 set.
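For a concrete comparison, this sketch computes usable capacity for 8 x 2TB drives under each level discussed above. It assumes RAID 50 and RAID 60 are built from two 4-drive sub-arrays and ignores metadata overhead; `usable_drives` is a hypothetical helper.

```python
def usable_drives(level: str, n: int, groups: int = 2) -> int:
    """Drives' worth of usable capacity for n drives; `groups` = RAID 5/6 sets in RAID 50/60."""
    if level == "RAID 10":
        return n // 2            # every block mirrored once
    if level == "RAID 6":
        return n - 2             # two drives' worth of parity
    if level == "RAID 50":
        return n - groups        # one drive's worth of parity per RAID 5 set
    if level == "RAID 60":
        return n - 2 * groups    # two drives' worth of parity per RAID 6 set
    raise ValueError(f"unknown level: {level}")


for level in ("RAID 10", "RAID 6", "RAID 50", "RAID 60"):
    print(f"{level}: {usable_drives(level, 8) * 2} TB")  # 2TB drives
```

With these assumptions RAID 10 and RAID 60 both yield 8TB, while RAID 6 and RAID 50 yield 12TB; RAID 60's capacity advantage over RAID 10 only appears in larger arrays.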

Conclusion

To summarize, in an 8 drive RAID 10 array made up of 4 RAID 1 mirror sets:

- Total data storage capacity is equal to 4 drives.

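- Any single drive failure is survivable, and up to 4 drives can fail without data loss as long as no more than 1 drive fails per RAID 1 set. Losing both drives of any one set takes the whole array offline.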

- To maximize fault tolerance, the two drives of each mirror should be attached to different controllers.

- A stripe size matched to the workload (larger for sequential I/O) and a battery-backed controller cache help optimize performance.

- RAID 10 is ideal for low latency transactional workloads that require redundancy.

The balanced performance and redundancy of RAID 10 helps support mission-critical systems and applications that demand high availability and uptime. Just remember that storage efficiency is only 50%, so capacity requirements should be considered when deciding between RAID 10 and other redundant RAID levels.