Quick Answer

No, the CPU does not access the hard disk directly in modern computer systems. The drive connects to the system through an interface such as SATA, SAS, or NVMe, and data moves between the disk and the CPU through a storage controller, such as a SATA controller, which handles the underlying communication protocols. The storage controller acts as an intermediary, providing an abstraction layer between the disk hardware and the operating system.

In a computer system, the central processing unit or CPU is considered the brain of the system. It executes program instructions and processes data. However, the CPU does not directly interact with most peripherals and hardware components. There are intermediary components that facilitate communication between the CPU and other devices.

One common question is whether the hard disk drive is directly accessed by the CPU or not. The hard disk drive is the primary long-term storage device in a computer and stores the operating system, applications, and data files. The contents of the disk must be available to the CPU for processing and execution. However, there are several steps involved in retrieving data from the disk and making it available to the CPU.

In this article, we will provide an in-depth look at how the hard disk drive communicates with the CPU and is accessed in a modern computer system. We will cover:

– Role of the hard disk drive

– Disk interfaces like SATA, SAS, NVMe

– Storage controllers

– Data transfer process from disk to CPU

– Abstraction between hardware and operating system

– Caching and buffered access of hard disk data

– Direct memory access (DMA)

Understanding these concepts will make it clear how the CPU interacts with storage devices like hard disks indirectly through intermediary components.

Role of Hard Disk Drive

The primary permanent storage device in a computer is the hard disk drive. It provides non-volatile storage, meaning it retains data even when powered off. Some key responsibilities of the hard disk include:

– Storing operating system files (for Windows, Linux, and so on)

– Storing applications, games, software

– Storing user files and documents

– Buffering recently read and pending write data in the drive's onboard cache

– Holding the page or swap files that back virtual memory when RAM runs short

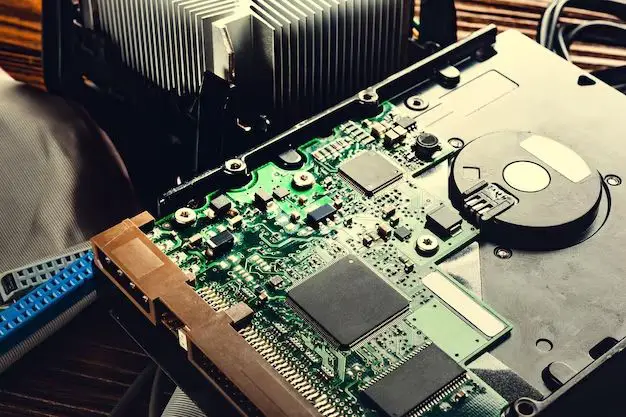

The hard disk drive consists of one or more platters with magnetic coating that store data in the form of bits. Read/write heads are used to magnetize and access data stored on the disk platters.

The data storage capacity of hard disks has grown enormously over the years. Consumer hard drives commonly store several terabytes of data, while enterprise drives reach tens of terabytes.

This high-capacity non-volatile storage makes the hard disk essential for long-term data storage. The CPU depends on the hard disk to provide secondary storage for programs and data required for processing.

Hard Disk Interfaces

There are different interfaces used to connect the hard disk drive to the computer system. Some common ones include:

SATA

SATA or Serial ATA is the most common hard disk interface found in modern desktop PCs and laptops. SATA was introduced as a replacement for the older PATA (Parallel ATA) standard and provides point-to-point serial connections between devices.

SATA cables carry data serially over two differential pairs of conductors, one pair for each direction. This allows for thinner and more flexible cables with smaller connectors compared to PATA ribbons. SATA host adapters and devices communicate through a layered protocol referred to as the SATA protocol stack.

SATA has shipped in three generations with line rates of 1.5 Gbit/s, 3 Gbit/s, and 6 Gbit/s. Even 6 Gbit/s is more than a mechanical hard disk can sustain, because platter rotation and head movement limit its throughput; in practice only SSDs come close to saturating the link.

SAS

SAS or Serial Attached SCSI is an enterprise-level disk interface optimized for servers, workstations, and RAID configurations. Its recent generations provide higher link speeds than SATA, and it supports advanced SCSI capabilities.

The SAS interface uses the standard SCSI command set and adds features like dual-port access to drives and higher reliability. Compatibility with SATA runs in one direction: SAS controllers can connect both SAS and SATA devices, but a SATA controller cannot drive a SAS disk.

SAS link rates range from 3 Gbit/s in the first generation to 22.5 Gbit/s in SAS-4, which is marketed as 24G SAS. The high-speed dual-ported access and advanced capabilities make SAS suitable for enterprise storage systems.

NVMe

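NVMe or Non-Volatile Memory Express is a high-performance storage protocol designed to leverage the fast access speeds of solid-state drives and the PCI Express interface. NVMe SSDs attach to PCIe lanes, often lanes wired straight to the CPU, which provides massive parallelism and throughput. The CPU still does not read the flash directly: it exchanges commands and completions with the NVMe controller on the drive through queues held in memory.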

Compared to protocols like SATA and SAS that were designed for mechanical hard disks, NVMe reduces latency and overhead. It takes advantage of the non-volatile memory in SSDs through streamlined queuing and commands. NVMe SSDs on a four-lane PCIe 3.0 link reach sequential read speeds of roughly 3500 MB/s, and drives on newer PCIe generations go higher.
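The roughly 3500 MB/s figure lines up with the ceiling of the PCIe link rather than with a limit of NVMe itself. A rough calculation, assuming a four-lane link and counting only the 128b/130b line encoding used by PCIe 3.0 and 4.0 (packet overhead is ignored, and the function name is ours):

```python
def pcie_link_mb_per_s(gigatransfers_per_s: float, lanes: int) -> float:
    """Approximate payload ceiling of a PCIe 3.0/4.0 link in MB/s (10^6 bytes)."""
    raw_bits_per_second = gigatransfers_per_s * 1e9 * lanes
    # 128b/130b encoding: 130 bits on the wire carry 128 bits of data.
    payload_bits_per_second = raw_bits_per_second * 128 / 130
    return payload_bits_per_second / 8 / 1e6


print(f"PCIe 3.0 x4: {pcie_link_mb_per_s(8, 4):.0f} MB/s")
print(f"PCIe 4.0 x4: {pcie_link_mb_per_s(16, 4):.0f} MB/s")
```

A PCIe 3.0 x4 link works out to a little under 4000 MB/s, so a drive rated near 3500 MB/s is already close to what the link can carry.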

NVMe is suited for high-performance computing, virtualization, databases, and intensive workstation applications that demand low latency. However, NVMe SSDs have higher costs compared to SATA SSDs.

Storage Controllers

The interfaces above describe the link to the drive, but the drive does not connect directly to the CPU. At the other end of that link sits an intermediary component called the storage controller or host bus adapter (HBA).

Some key responsibilities of storage controllers include:

– Implementing disk interface protocols like SATA, SAS, NVMe

– Managing data transfers between system memory and storage devices

– Providing access to storage from operating system

– Implementing RAID capabilities

– Providing interconnect features like port multipliers and expanders

– Providing queueing mechanisms and buffer memory

The storage controller handles all the low-level aspects of interfacing with storage devices and makes the storage available to the operating system in a standardized way. For example, SATA controllers implement functionality like Native Command Queuing, port selectors, and FIS communication defined by the SATA protocol specification.
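To give a feel for why command queuing helps a mechanical disk, here is a toy model. Native Command Queuing lets a drive hold up to 32 outstanding commands and service them in an order of its own choosing. The sketch below uses a simple nearest-request-first rule as a stand-in; actual drive firmware also accounts for rotational position, and its scheduling is not public. All names are hypothetical.

```python
def reorder_nearest_first(head_lba, pending_lbas):
    """Service queued requests nearest-first (a stand-in for firmware scheduling)."""
    remaining = list(pending_lbas)
    order = []
    position = head_lba
    while remaining:
        nearest = min(remaining, key=lambda lba: abs(lba - position))
        remaining.remove(nearest)
        order.append(nearest)
        position = nearest
    return order


def total_head_travel(head_lba, lbas):
    """Sum of distances (in blocks) the head moves when servicing lbas in order."""
    travel = 0
    position = head_lba
    for lba in lbas:
        travel += abs(lba - position)
        position = lba
    return travel


queue = [900, 100, 520, 880, 150]   # requests in arrival order
start = 500                         # current head position
reordered = reorder_nearest_first(start, queue)
print("arrival order travel:  ", total_head_travel(start, queue))
print("reordered queue:       ", reordered)
print("reordered order travel:", total_head_travel(start, reordered))
```

In this example the reordered queue moves the head less than half as far as servicing the requests in arrival order.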

Storage controllers are integrated into the motherboard chipset in consumer-grade systems. For example, modern Intel platforms have SATA controllers built into the PCH or Platform Controller Hub. Servers feature more advanced controller cards like SAS/RAID cards to enable enterprise capabilities. With NVMe, the controller is built into the SSD itself and is reached over PCIe.

In this manner, the hard disk drive connects to and communicates with the storage controller, not directly with the CPU. The controller acts as the interface between the raw storage device and the operating system.

Data Transfer from Disk to CPU

Now that we have seen how hard disks interface to the system using storage controllers, let’s look at the actual flow of data from the disk to the CPU:

1. The operating system issues a request for reading data from the hard disk, specifying the read location and number of bytes. This request goes to the storage controller.

2. The request is translated into the SATA, SAS, or NVMe commands that the drive understands. The OS driver and the controller share this work.

3. The read command is communicated to the hard disk via the interconnect protocol.

4. The hard disk locates the requested data on the platters and sends it serially to the controller via the interface protocol.

5. The controller writes the data into system RAM using direct memory access (DMA), at buffer addresses the OS supplied with the request.

6. The controller raises an interrupt to notify the operating system that the transfer is complete.

7. The CPU can now access the requested data from RAM and process it.

This sequence shows that the hard disk never interfaces with the CPU directly. The storage controller sits between them, and without it a SATA or SAS hard disk has no way to exchange data with the CPU or RAM.

Because the controller handles the intricate low-level storage protocols, the operating system only has to issue simple logical block read and write requests. The controller carries them out as the corresponding SATA or SAS transactions and manages the data transfer without the CPU moving each byte.
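A logical block request boils down to a starting block number and a block count. The sketch below shows how a byte offset and length map onto such a request. It assumes 512-byte logical blocks (many drives use 4096 instead), and the function name is ours.

```python
SECTOR_SIZE = 512  # bytes per logical block; an assumption for this sketch


def byte_range_to_block_request(offset, length, sector_size=SECTOR_SIZE):
    """Return (first_lba, block_count) covering `length` bytes starting at `offset`."""
    if offset < 0 or length <= 0:
        raise ValueError("offset must be >= 0 and length must be > 0")
    first_lba = offset // sector_size
    last_lba = (offset + length - 1) // sector_size
    return first_lba, last_lba - first_lba + 1


# 100 bytes starting at byte 1000 straddle two blocks (LBA 1 and LBA 2).
print(byte_range_to_block_request(1000, 100))
# An aligned 4096-byte read covers exactly eight 512-byte blocks.
print(byte_range_to_block_request(4096, 4096))
```

Because the drive only deals in whole blocks, even a small unaligned read can touch more than one block.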

Abstraction between Hardware and Software

An important outcome of this separation between the storage device and the CPU is abstraction. The storage controller provides an abstraction layer that hides the complexity of interfaces like SATA, SAS or NVMe from the operating system.

Due to this, the OS is not concerned about the intricate technical protocols used by the underlying device-level interface. The OS simply interfaces logically with uniform block storage without worrying about the type of physical storage device being used.

This abstraction enables using different types of hard disk interfaces and controllers transparently under the same OS. The OS is shielded from the changes in physical storage hardware thanks to the abstraction provided by storage controllers.

For example, a SATA hard disk can be replaced with a SAS hard disk without any change to how the OS and applications address storage, provided the system has a SAS controller and a driver for it. The SAS controller takes care of communicating appropriately with the SAS drive, while the OS still sees generic block storage.
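The idea of a uniform block interface can be sketched in a few lines. Every class and function name here is hypothetical, and the in-memory "disks" stand in for a whole driver, controller, and drive stack; real operating systems implement this layering in their driver model. The command names recorded by each class (the ATA READ FPDMA QUEUED command and the SCSI READ(16) command) are only there to show that the wire-level detail differs while the caller's view does not.

```python
from abc import ABC, abstractmethod

BLOCK_SIZE = 512  # bytes per logical block (assumed for this sketch)


class BlockDevice(ABC):
    """What the OS sees: numbered blocks, nothing about the wire protocol."""

    @abstractmethod
    def read_blocks(self, lba: int, count: int) -> bytes:
        ...


class ToySataDisk(BlockDevice):
    def __init__(self, contents: bytes):
        self._contents = contents
        self.last_command = None

    def read_blocks(self, lba, count):
        # A real SATA stack would issue an ATA read command here.
        self.last_command = ("ATA READ FPDMA QUEUED", lba, count)
        start = lba * BLOCK_SIZE
        return self._contents[start:start + count * BLOCK_SIZE]


class ToySasDisk(BlockDevice):
    def __init__(self, contents: bytes):
        self._contents = contents
        self.last_command = None

    def read_blocks(self, lba, count):
        # A real SAS stack would issue a SCSI read command here.
        self.last_command = ("SCSI READ(16)", lba, count)
        start = lba * BLOCK_SIZE
        return self._contents[start:start + count * BLOCK_SIZE]


def read_first_block(device: BlockDevice) -> bytes:
    """'OS-level' code: identical no matter which kind of disk is attached."""
    return device.read_blocks(0, 1)


image = bytes(range(256)) * 4          # 1024 bytes, i.e. two blocks
sata_disk = ToySataDisk(image)
sas_disk = ToySasDisk(image)
same_data = read_first_block(sata_disk) == read_first_block(sas_disk)
print(same_data)
print(sata_disk.last_command[0], "|", sas_disk.last_command[0])
```

The caller receives the same bytes from either device, even though each one recorded a different low-level command.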

Caching and Buffered Access

Storage controllers can also improve disk access performance by incorporating memory and caching capabilities, most notably on hardware RAID cards; the drives themselves carry an onboard cache as well. When data is read from disk, it is kept in this fast buffer memory whenever feasible. This avoids the need to access the mechanical hard disk again to retrieve the same data.

Controllers may also implement disk caching algorithms, like least recently used (LRU), to cache frequently accessed data. Read-ahead algorithms are used to predictively read data from disk and buffer it for near future requests.

Caching and buffering enable the controller to service many read requests directly from its memory without having to incur the latency of hard disk access every time. This significantly improves overall storage performance.

Instead of direct raw access to the hard disk, the OS receives cached buffered access transparently. The buffering and caching optimizations on the controller are hidden from the operating system due to the abstraction layer.
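A least recently used cache of disk blocks can be sketched as follows. This is a minimal illustration rather than how any particular controller is built: the class name, the capacity, and the `read_from_disk` callback are all assumptions, and read-ahead is left out to keep it short.

```python
from collections import OrderedDict


class LruBlockCache:
    """Keeps the most recently used blocks in memory, evicting the oldest."""

    def __init__(self, capacity, read_from_disk):
        self.capacity = capacity
        self.read_from_disk = read_from_disk  # slow path: fetch a block by LBA
        self._blocks = OrderedDict()          # LBA -> data, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, lba):
        if lba in self._blocks:
            self._blocks.move_to_end(lba)     # mark as most recently used
            self.hits += 1
            return self._blocks[lba]
        self.misses += 1
        data = self.read_from_disk(lba)
        self._blocks[lba] = data
        if len(self._blocks) > self.capacity:
            self._blocks.popitem(last=False)  # evict the least recently used
        return data


disk_reads = []


def slow_disk_read(lba):
    disk_reads.append(lba)                    # record every trip to the "disk"
    return f"block-{lba}".encode()


cache = LruBlockCache(capacity=2, read_from_disk=slow_disk_read)
for lba in (1, 2, 1, 3, 2):
    cache.read(lba)
print("hits:", cache.hits, "misses:", cache.misses, "disk reads:", disk_reads)
```

With room for only two blocks, the second read of block 1 is served from memory, while block 2 has been evicted by the time it is requested again and must be fetched from the disk a second time.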

Direct Memory Access (DMA)

For fast data transfers between storage and memory, controllers use a capability called Direct Memory Access (DMA). DMA enables the controller to directly access system RAM to transfer data without continuous involvement from the CPU.

The CPU initializes the DMA transfer by providing memory pointers and data length to the controller. The controller can then directly place data into memory without needing to copy via intermediate CPU registers.

DMA thereby reduces CPU overhead and speeds up data transfers between storage and memory. The CPU is freed up for other processing while the controller independently handles data transfers through DMA.

By incorporating DMA and avoiding slow CPU copy methods, storage controllers minimize data transfer latency between storage media and memory. The operating system's driver sets up each DMA transfer, but applications never see this; to them, a read simply returns data.
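The division of labor in a DMA transfer can be modeled with a toy simulation. A `bytearray` stands in for system RAM, and the descriptor, controller class, and interrupt flag are all hypothetical names; real controllers are programmed through hardware registers and descriptor tables defined by their specifications.

```python
from dataclasses import dataclass


@dataclass
class DmaDescriptor:
    """What the CPU hands over: where in RAM to put the data, and how much."""
    address: int
    length: int


class ToyController:
    def __init__(self, ram: bytearray):
        self.ram = ram
        self.interrupt_raised = False

    def dma_write(self, descriptor: DmaDescriptor, data: bytes):
        """Place data straight into RAM, then signal completion."""
        if len(data) > descriptor.length:
            raise ValueError("data does not fit in the described buffer")
        end = descriptor.address + len(data)
        if end > len(self.ram):
            raise ValueError("buffer lies outside RAM")
        self.ram[descriptor.address:end] = data   # no CPU register copy modeled
        self.interrupt_raised = True              # tells the OS the data is ready


ram = bytearray(64)
controller = ToyController(ram)

# The "CPU" only sets up the transfer...
descriptor = DmaDescriptor(address=16, length=8)
# ...and the "controller" moves the bytes on its own.
controller.dma_write(descriptor, b"DISKDATA")

print(bytes(ram[16:24]), controller.interrupt_raised)
```

The point of the model is that the CPU's part ends once the descriptor is handed over; it learns that the data has arrived only when the completion signal is raised.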

Conclusion

In conclusion, the hard disk drive is not directly accessed by the CPU in modern computer systems. There are several intermediary components and processes involved between the storage media itself and the CPU accessing data from it:

– Storage devices use dedicated interfaces like SATA, SAS, and NVMe to connect to the system through storage controllers.

– Storage controllers implement the protocol specifics of device interfaces and facilitate data transfers.

– Controllers provide an abstraction layer that hides physical storage details from the operating system.

– Caching, buffering, and DMA capabilities in controllers improve storage access performance.

– The operating system simply sees generic logical block storage without concern for the underlying physical storage hardware.

So while the hard disk stores the data that the CPU needs to process and execute, the access is not direct. Storage controllers act as intermediaries between the disk and the CPU to handle communication protocols, data transfers, caching, abstraction, and other responsibilities. This separation leads to modular, high-performance storage subsystems that can evolve independently of CPUs and operating systems.