In computer architecture, cache memory is used to reduce the time needed to access data from main memory. There are typically multiple levels of cache, with the lowest level (Level 1 or L1 cache) being the fastest but smallest in size, while higher levels like Level 3 (L3 cache) are larger but slower than the lower levels. But is Level 3 cache actually faster than Level 1 cache? Let’s take a closer look at how cache memory works to find out.

What is Cache Memory?

Cache memory is a smaller, faster memory that sits between the CPU and main memory. It holds copies of recently and frequently used data from main memory to reduce the average time to access memory. Cache memory exploits locality of reference, which has two forms. Temporal locality is the tendency of programs to reuse the same data or instructions within a short period of time. Spatial locality is the tendency to access data located near recently used data. By keeping frequently accessed data in a small, fast cache near the CPU, overall memory latency is reduced.
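Caches move data in fixed-size blocks called cache lines. A single line fill therefore brings in several neighbouring elements, and this is what makes spatial locality pay off. The minimal sketch below counts how many distinct lines two access patterns touch. It assumes 64-byte lines and 4-byte elements; both sizes are assumptions, though they are common. `lines_touched` is a hypothetical helper, not a library function.

```python
# Sketch: count how many distinct cache lines an access pattern touches.
# Assumes 64-byte lines (common on x86 and many Arm cores) and 4-byte elements.

LINE_SIZE = 64   # bytes per cache line (assumption)
ELEM_SIZE = 4    # bytes per element, e.g. a 32-bit int (assumption)


def lines_touched(indices, elem_size=ELEM_SIZE, line_size=LINE_SIZE):
    """Return the number of distinct cache lines covered by the element indices."""
    return len({(i * elem_size) // line_size for i in indices})


n = 1024
sequential = range(n)               # a[0], a[1], a[2], ...
strided = range(0, n * 16, 16)      # a[0], a[16], a[32], ... (one element per line)

print(lines_touched(sequential))    # 64: 16 consecutive ints share each line
print(lines_touched(strided))       # 1024: every access lands on a new line
```

Both patterns make 1,024 accesses. The sequential one needs only 64 line fills, so most of its accesses find their line already cached.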

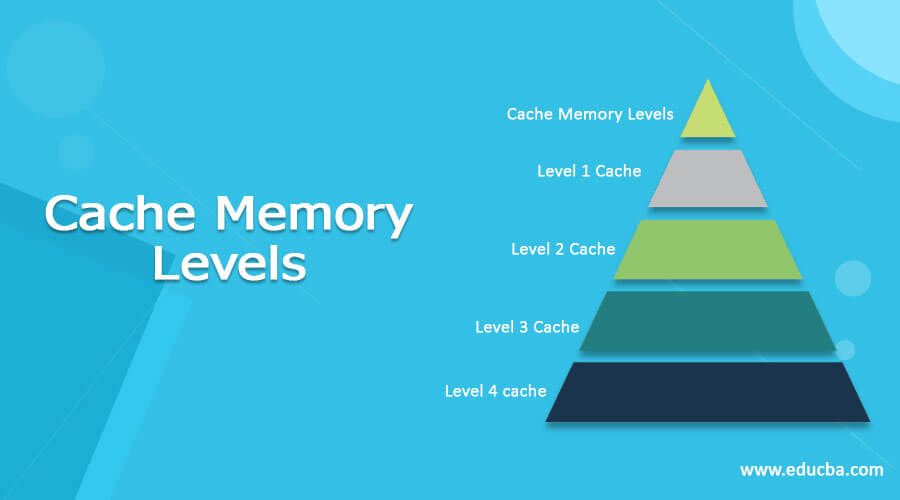

There are typically 3 levels of cache in modern computer systems:

Level 1 (L1) Cache

L1 cache is the smallest and fastest cache memory, typically between 8KB and 64KB per core. It is built into each CPU core, providing the lowest latency and highest bandwidth. L1 is usually split into separate instruction and data caches. It uses static RAM (SRAM), which is fast but expensive.

Level 2 (L2) Cache

L2 cache is larger, from 128KB to several MB in size, and has longer latency than L1. It is on the same chip as the cores. In most modern designs each core has its own private L2, though some designs share one L2 among a small cluster of cores. L2 cache is unified, storing both instructions and data. It also uses fast SRAM.

Level 3 (L3) Cache

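L3 cache is the largest and slowest cache, from several MB up to tens of MB in modern processors. In most current processors it sits on the same die as the cores and is shared among them. Older designs placed it off-chip, and some recent ones add extra cache on a separate die in the same package. L3 latency is higher than that of L1 and L2 but much lower than main memory. Like L1 and L2, L3 is normally built from SRAM; a few designs, such as some IBM POWER processors, have used embedded DRAM instead. In some designs, such as AMD's Zen cores, L3 acts as a victim cache that is filled mainly with lines evicted from L2.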

Cache Memory Hierarchy

Cache memory forms a hierarchy, with the smallest, fastest caches closest to the CPU cores. When the CPU needs to read or write data, it first checks the L1 cache. If the data is not found in L1 (a cache miss), it looks in the larger L2 cache, then L3, and finally main memory. When data is written, most caches use a write-back policy. The modified ("dirty") line stays in the cache and is written to the next level only when it is evicted, eventually reaching main memory.
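The lookup order described above can be modelled in a few lines. This is a simplified sketch, not a description of how any particular CPU works. It assumes every level is fully associative with LRU replacement, that a miss fills every level it passed through, and that there are no writes. `LRUCache` and `access` are hypothetical names.

```python
from collections import OrderedDict


class LRUCache:
    """Fully associative cache holding `capacity` lines, with LRU replacement."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity
        self.lines = OrderedDict()

    def lookup(self, line):
        """Return True on a hit and mark the line as most recently used."""
        if line in self.lines:
            self.lines.move_to_end(line)
            return True
        return False

    def fill(self, line):
        """Insert a line, evicting the least recently used one if the cache is full."""
        self.lines[line] = True
        self.lines.move_to_end(line)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)


def access(levels, line):
    """Check levels in order (L1 first); on a hit, fill the faster levels that missed."""
    for depth, cache in enumerate(levels):
        if cache.lookup(line):
            for inner in levels[:depth]:
                inner.fill(line)
            return cache.name
    for cache in levels:          # missed everywhere: fetch from main memory
        cache.fill(line)
    return "memory"


hierarchy = [LRUCache("L1", 4), LRUCache("L2", 16), LRUCache("L3", 64)]
print([access(hierarchy, line) for line in [0, 1, 2, 3, 4, 5, 0]])
# ['memory', 'memory', 'memory', 'memory', 'memory', 'memory', 'L2']
```

On its second use, cache line 0 is served by L2. The four-line L1 has already evicted it, but the 16-line L2 still holds it.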

This hierarchy exploits locality to reduce average memory access time. Recently used data is brought into the levels closest to the core. Data that goes unused is displaced from those levels and may survive only in the larger outer ones. Each outer level is slower than the one before it, but because it is larger it catches many of the accesses that miss in the smaller levels. The table below shows typical cache sizes and access times:

| Cache Level | Typical Size | Access Time |
|---|---|---|
| L1 | 32KB | 3 – 5 cycles |
| L2 | 256KB | 10 – 20 cycles |
| L3 | 8MB | 40 – 60 cycles |

As can be seen, L1 has the smallest size but the lowest latency, just a few cycles. In contrast, L3 is far larger, but its latency of around 40-60 cycles is roughly an order of magnitude higher than L1's.
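The combined effect of the levels is usually summarized as average memory access time (AMAT). Each level costs its hit time plus its local miss rate times the cost of the next level:

AMAT = t_L1 + m_L1 × (t_L2 + m_L2 × (t_L3 + m_L3 × t_mem))

The sketch below evaluates that formula. The latencies (4, 12 and 50 cycles for L1 to L3, and 200 cycles for main memory) and the miss rates are illustrative assumptions, not measurements. `amat` is a hypothetical helper.

```python
def amat(hit_times, local_miss_rates, memory_time):
    """Average memory access time for a multi-level hierarchy.

    hit_times[i] is the hit latency of level i in cycles; local_miss_rates[i] is
    the fraction of accesses *reaching* level i that miss there.
    """
    time = memory_time
    # Start from main memory and fold inward: each level costs its hit time
    # plus its miss rate times the cost of everything beyond it.
    for hit_time, miss_rate in zip(reversed(hit_times), reversed(local_miss_rates)):
        time = hit_time + miss_rate * time
    return time


latencies = [4, 12, 50]   # assumed L1, L2, L3 hit times in cycles
dram = 200                # assumed main-memory latency in cycles

print(amat(latencies, [0.10, 0.5, 0.5], dram))  # about 12.7 cycles
print(amat(latencies, [0.10, 0.5, 1.0], dram))  # about 17.7 cycles if L3 never hits
```

Under these assumed numbers, only 5% of all accesses ever reach L3. Even so, L3 hits cut the average from about 17.7 to about 12.7 cycles.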

Is L3 Faster than L1?

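Given the large difference in access times, it may seem clear that L1 cache must be faster than L3 cache, and for any single access it is: an L1 hit always beats an L3 hit. The more interesting question is which level contributes more to a program's overall performance. Several considerations make that comparison more complex: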

Hit Rate

The most important factor is the hit rate: the percentage of accesses that find the requested data in a given cache. A fast cache helps only with the accesses it actually catches, and every miss pays the cost of going to the next level. For most programs L1 satisfies the large majority of accesses, and L3 sees only those that miss in both L1 and L2. But L3 is hundreds of times larger than L1, so it can hold working sets that L1 cannot. Every L3 hit also saves a much slower trip to main memory.
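The capacity argument can be seen in a small simulation. This sketch assumes fully associative caches with LRU replacement and 64-byte lines. Real L1s are set-associative and usually use approximations of LRU. With these assumptions, 512 lines stands in for a 32KB L1 and 131,072 lines for an 8MB L3. `lru_hit_rate` is a hypothetical helper.

```python
from collections import OrderedDict


def lru_hit_rate(trace, capacity):
    """Fraction of accesses in `trace` that hit in a fully associative LRU cache."""
    cache = OrderedDict()
    hits = 0
    for line in trace:
        if line in cache:
            hits += 1
            cache.move_to_end(line)          # mark as most recently used
        else:
            cache[line] = True
            if len(cache) > capacity:
                cache.popitem(last=False)    # evict the least recently used line
    return hits / len(trace)


# Loop 10 times over a working set of 1,000 cache lines (about 62.5 KB).
trace = list(range(1000)) * 10

small = 512      # 32 KB of 64-byte lines (roughly L1-sized, assumption)
large = 131072   # 8 MB of 64-byte lines (roughly L3-sized, assumption)
print(lru_hit_rate(trace, small))  # 0.0
print(lru_hit_rate(trace, large))  # 0.9
```

The cyclic loop is a worst case for LRU. With 1,000 lines cycling through a 512-line cache, every line is evicted just before it is needed again. The larger cache misses only on the first pass.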

Bandwidth

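L1 has the highest bandwidth of any level: a modern core can typically complete two or more loads from its L1 data cache every cycle. L1 never talks to main memory directly; its misses are passed outward through L2 and L3. L3 delivers less bandwidth to any single core. However, because it is shared by all cores, its aggregate bandwidth across the chip is large, which matters when many cores are busy at once.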

Prefetching

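Modern CPUs have hardware prefetchers at several levels that watch access patterns and fetch data speculatively before it is requested. On many Intel cores, for example, L1 has simple next-line and stride prefetchers. An L2 "streamer" follows longer sequential streams and can place data in L2 or L3 ahead of use. Prefetching into the outer levels helps hide their naturally higher latency. L1 misses on a prefetched stream are then served from L2 or L3 instead of main memory.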
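The simplest form of hardware prefetching is next-line prefetching: on each access to line n, also fetch line n + 1. The sketch below models it on top of an LRU cache to show why prefetching helps sequential streams but not scattered accesses. It is an illustration under assumed parameters (a 64-line cache and a fixed random seed), not a model of any real CPU's prefetcher. `demand_misses` is a hypothetical name.

```python
import random
from collections import OrderedDict


def demand_misses(trace, capacity, prefetch_next=False):
    """Count demand misses in an LRU cache, optionally prefetching line+1 on each access."""
    cache = OrderedDict()

    def insert(line):
        cache[line] = True
        cache.move_to_end(line)
        if len(cache) > capacity:
            cache.popitem(last=False)   # evict the least recently used line

    misses = 0
    for line in trace:
        if line in cache:
            cache.move_to_end(line)
        else:
            misses += 1
            insert(line)
        if prefetch_next and line + 1 not in cache:
            insert(line + 1)            # speculative fill of the next sequential line
    return misses


sequential = list(range(10_000))
rng = random.Random(42)
scattered = [rng.randrange(1_000_000) for _ in range(10_000)]

print(demand_misses(sequential, 64), demand_misses(sequential, 64, prefetch_next=True))  # 10000 1
print(demand_misses(scattered, 64), demand_misses(scattered, 64, prefetch_next=True))
```

For the sequential stream, only the very first access misses, because every later line was fetched one step ahead. For the scattered trace, the prefetched lines are almost never used. They mostly take up space that demand fetches could have used.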

Victim Cache

In designs where L3 acts as a victim cache for lines evicted from L2, it holds data that is no longer present in L1 or L2. When the core needs that data again, it can fetch it from L3 instead of from much slower main memory.
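A victim cache can be modelled as a second cache that is filled only with lines that the level above it evicts. This toy sketch assumes an exclusive arrangement: a line lives in either L2 or L3, and it moves back to L2 on an L3 hit. That is one of several possible policies. `VictimHierarchy` is a hypothetical class.

```python
from collections import OrderedDict


class VictimHierarchy:
    """Toy two-level model: a small L2 whose evicted lines go into a victim-style L3.

    Simplifications (assumptions): fully associative LRU at both levels, no L1,
    and a line is moved (not copied) back from L3 to L2 on an L3 hit.
    """

    def __init__(self, l2_lines, l3_lines):
        self.l2 = OrderedDict()
        self.l3 = OrderedDict()
        self.l2_lines = l2_lines
        self.l3_lines = l3_lines

    def _fill_l2(self, line):
        self.l2[line] = True
        if len(self.l2) > self.l2_lines:
            victim, _ = self.l2.popitem(last=False)   # L2 evicts its LRU line...
            self.l3[victim] = True                    # ...and L3 is filled with that victim
            if len(self.l3) > self.l3_lines:
                self.l3.popitem(last=False)

    def access(self, line):
        if line in self.l2:
            self.l2.move_to_end(line)
            return "L2"
        if line in self.l3:
            del self.l3[line]        # exclusive design: the line moves back to L2
            self._fill_l2(line)
            return "L3"
        self._fill_l2(line)          # data from memory goes to L2, not into L3
        return "memory"


h = VictimHierarchy(l2_lines=2, l3_lines=4)
print([h.access(x) for x in [0, 1, 2, 0]])  # ['memory', 'memory', 'memory', 'L3']
```

The third access evicts cache line 0 from the two-line L2, and the line lands in L3. On the fourth access it is served from L3 instead of from main memory.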

Access Patterns

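Memory access patterns play a key role too. L1 gives the fastest access to recently used data. For large data sets, L3's capacity lets it keep data that L1 has no room for. Caches exploit locality rather than create it. Sequential and strided scans let prefetchers run ahead, while random accesses benefit from L3 only if the data they touch fits in it.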

When is L3 Faster than L1?

Taking these factors together, L3's capacity and prefetching matter more to performance than L1's speed in the following cases:

Accessing Large Data Sets

When a working set is much larger than L1 but fits in L3, L1 misses constantly and most accesses are served by L2 or L3 instead. L3's capacity, helped by prefetching, keeps those misses from going all the way to main memory.

Streaming/Sequential Access

For streaming workloads with sequential access, hardware prefetchers can bring data into the caches ahead of use. Each L1 miss is then served from a nearby level such as L2 or L3 instead of waiting on main memory.

Random Memory Access

With random accesses that defeat prefetching and spatial locality, L1 misses frequently. If the data fits in L3, those misses are still served from L3 rather than from main memory.

Shared Data

Each L1 is private to its core. L3 is shared by all cores, so data that one core has already brought in can be supplied to another core from L3 instead of from main memory.

Victim Cache Hits

When a line evicted from L1/L2 into a victim-style L3 is reused, L3 supplies it with far lower latency than fetching it again from main memory.

When is L1 Faster than L3?

An L1 hit is always faster than an L3 hit, and L1 dominates performance in these cases:

Recently Used Data

For data reused within a short time period, L1 keeps the working set inside the core, so repeated accesses hit at the lowest possible latency.

Instruction Cache

The L1 instruction cache provides extremely low latency access to recently executed code segments. L3 cannot match this performance.

Small Data Sets

For small data sets that fit entirely within L1, every access after the first hits in L1 in just a few cycles and never needs an L3 lookup.

Latency-Sensitive Code

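Some code is highly sensitive to latency. One example is pointer chasing through a linked list, where each load depends on the result of the previous one. An L1 hit takes on the order of a nanosecond, while an L3 hit typically takes ten nanoseconds or more.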
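Converting cycles to time is a simple division by the clock frequency. The sketch assumes a 4 GHz clock and round-number latencies of 4 cycles for an L1 hit and 50 cycles for an L3 hit. `cycles_to_ns` is a hypothetical helper. For a chain of dependent loads, such as walking a linked list, these latencies add up. Each load must finish before the next address is known.

```python
def cycles_to_ns(cycles, clock_ghz):
    """Convert a latency in core clock cycles to nanoseconds (1 GHz = 1 cycle per ns)."""
    return cycles / clock_ghz


clock = 4.0  # assumed core clock in GHz
print(cycles_to_ns(4, clock))    # 1.0 ns for an assumed 4-cycle L1 hit
print(cycles_to_ns(50, clock))   # 12.5 ns for an assumed 50-cycle L3 hit

dependent_loads = 1_000  # e.g. walking a 1,000-node linked list (hypothetical)
print(dependent_loads * cycles_to_ns(4, clock))   # 1000.0 ns if every node hits in L1
print(dependent_loads * cycles_to_ns(50, clock))  # 12500.0 ns if every node hits only in L3
```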

Highly Localized Access

Threads that work only on their own private data get the most out of their core's L1. Data that one core writes and another reads has to travel between cores through the slower shared cache levels.
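One way to give each core its own data is to split an array into contiguous per-core chunks. If the chunk boundaries fall on cache-line boundaries, no line contains elements owned by two cores. This is a minimal sketch under three assumptions: 64-byte lines, an element size that divides the line size, and an array that starts on a line boundary. `core_chunks` is a hypothetical helper.

```python
LINE_SIZE = 64  # bytes per cache line (assumption)


def core_chunks(n_elems, elem_size, n_cores, line_size=LINE_SIZE):
    """Split [0, n_elems) into one contiguous range per core.

    Chunk boundaries are rounded up to whole cache lines, so no line holds
    elements belonging to two different cores (assumes the array starts on a
    line boundary and elem_size divides line_size).
    """
    per_line = line_size // elem_size
    lines = -(-n_elems // per_line)          # ceiling division: lines needed
    lines_per_core = -(-lines // n_cores)    # ceiling division: lines per core
    chunks = []
    for core in range(n_cores):
        start = min(core * lines_per_core * per_line, n_elems)
        stop = min((core + 1) * lines_per_core * per_line, n_elems)
        chunks.append(range(start, stop))
    return chunks


print(core_chunks(1000, 8, 4))
# [range(0, 256), range(256, 512), range(512, 768), range(768, 1000)]
```

With 8-byte elements there are 8 per line. Each boundary (256, 512, 768) is a multiple of 8, so every line belongs to exactly one core.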

Optimizing for L1 vs L3

To optimize performance, programmers should structure algorithms and data layouts to make the best use of L1's and L3's relative strengths:

Optimize L1 Use

– Increase data reuse within loops and functions to exploit L1 locality.

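– Keep frequently accessed values in local variables so the compiler can hold them in registers. (The C `register` keyword is only a hint that modern optimizing compilers largely ignore, and C++17 removed it.)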

– Use small, contiguous data structures that fit in L1.

– Partition data into core-local chunks that don’t thrash L1.
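Increasing data reuse within loops is often done by tiling (also called blocking), which means processing a large array in small blocks that fit in L1. The sketch below shows the loop structure for a tiled matrix transpose. It is written in Python for readability, and one caveat applies. Python lists of lists are not contiguous in memory, and interpreter overhead hides most cache effects. The benefit therefore appears only when the same structure is applied to contiguous arrays in a compiled language. The default tile size of 32 is an assumed starting point. `transpose_tiled` and `transpose_naive` are hypothetical names.

```python
def transpose_naive(a):
    """Reference transpose of an n x n list-of-lists matrix."""
    n = len(a)
    return [[a[i][j] for i in range(n)] for j in range(n)]


def transpose_tiled(a, tile=32):
    """Transpose an n x n matrix one tile x tile block at a time.

    Within a block, the rows read from `a` and the rows written to `out` are few
    enough to stay cached together when the same loops run over contiguous
    arrays in a compiled language.
    """
    n = len(a)
    out = [[None] * n for _ in range(n)]
    for ii in range(0, n, tile):                 # block row
        for jj in range(0, n, tile):             # block column
            for i in range(ii, min(ii + tile, n)):
                row = a[i]
                for j in range(jj, min(jj + tile, n)):
                    out[j][i] = row[j]
    return out


m = [[r * 100 + c for c in range(70)] for r in range(70)]
print(transpose_tiled(m, tile=16) == transpose_naive(m))  # True
```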

Optimize L3 Use

– When a working set cannot fit in L1, size or block it so that it fits in L2 or L3 rather than spilling to main memory.

– Access data sequentially or with a constant stride so that hardware prefetchers can fetch it ahead of use.

– Share read-only data between threads to benefit from shared L3.

– Increase parallelism to maximize aggregate L3 bandwidth.
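Block sizes can be derived from the capacity of the cache being targeted. As a rough sketch, assume 8-byte elements and a blocked matrix multiply that touches three square tiles at once. The largest tile edge B then satisfies 3 × B × B × 8 ≤ cache size. `max_tile` is a hypothetical helper, and the cache sizes are the typical values from the table above.

```python
import math


def max_tile(cache_bytes, elem_size=8, blocks=3):
    """Largest square tile edge B such that `blocks` B x B tiles fit in `cache_bytes`.

    blocks=3 models a blocked matrix multiply C += A @ B, which touches one tile
    of each matrix at a time. Treat the result as an upper bound.
    """
    return math.isqrt(cache_bytes // (blocks * elem_size))


print(max_tile(32 * 1024))        # 36: tiles sized for a 32 KB L1
print(max_tile(8 * 1024 * 1024))  # 591: tiles sized for an 8 MB L3
```

Real code usually picks a somewhat smaller, rounder size (for example 32 instead of 36) to leave room for other data. An L3 tile size should also account for L3 being shared among all cores.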

Choosing data access patterns carefully lets a program get the most out of both L1 and L3 caches.

Conclusion

In summary, L3 cache is never faster than L1 for a single access: an L1 hit always has lower latency. For many workloads, however, L3 does more for overall performance than L1. Its larger capacity catches accesses that L1 cannot hold, and each L3 hit avoids a far slower trip to main memory. Prefetching and its shared design add to that advantage. Performance depends heavily on the access pattern. L1 dominates for localized data reuse and latency-sensitive code, while L3 carries streaming workloads, large data sets, and random accesses over data that fits in it. By structuring algorithms to use both L1 and L3 appropriately, overall memory performance can be maximized. The CPU cache hierarchy provides great flexibility in system design once understood.