It’s a common experience when saving files to notice that the size on disk is bigger than the actual file size. For example, you may save a 10 MB file, but then see that it takes up 12 MB of space on your hard drive. What causes this disparity between the actual and on-disk sizes? There are several factors that contribute to the on-disk size being larger than the true file size.

Operating System Overhead

One major reason is file system overhead. When you save a file, the file system does not necessarily store it as one contiguous run of bytes. It may split the file into chunks and place them in different locations on the drive, which lets it use whatever free space is available. The trade-off is that extra space is needed to record where those chunks are. File systems like NTFS and HFS+ keep this bookkeeping information, known as metadata, in dedicated structures that catalog the pieces of each file.

This metadata isn’t included in the stated file size, but it does take up space on disk. It holds the file name, timestamps, ownership and permissions, and the addresses of each chunk of data. For a single 10 MB file the metadata is small, typically a few kilobytes at most, but across hundreds of thousands of files it adds up to a noticeable share of the volume.

Cluster Size

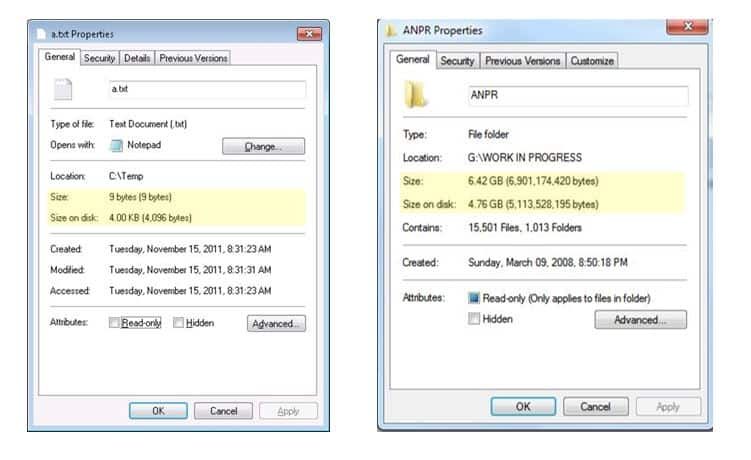

In addition to metadata, file systems allocate space in fixed-size chunks called clusters or allocation units. A cluster is the smallest amount of disk space that can be allocated to a file. For example, a volume using 4 KB clusters generally reserves at least 4 KB for every non-empty file, even one that holds a single byte. A file’s on-disk size is its actual size rounded up to the next multiple of the cluster size: a file of exactly 10 MB (10,240 KB) fits in 2,560 clusters with nothing wasted, while a file one byte larger occupies 2,561 clusters, or 10,244 KB.

This wasted space from allocating full clusters is called internal fragmentation, or slack space. The smaller the cluster size, the less space is lost to it. A cluster cannot be smaller than one disk sector, though, and very small clusters leave the file system with more clusters to track. Typical cluster sizes range from 4 KB to 64 KB. As a rough average, about half a cluster is wasted per file, so a volume holding many small files can lose a significant amount of space to cluster size alone.
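The cluster arithmetic can be sketched in a few lines of Python. This is a simplified model, assuming every file is rounded up to whole clusters; the function names `size_on_disk` and `slack` are made up for this illustration, and real file systems may handle very small files differently.

```python
def size_on_disk(file_size: int, cluster_size: int = 4096) -> int:
    """Bytes allocated when space is handed out in whole clusters."""
    if file_size < 0 or cluster_size <= 0:
        raise ValueError("file_size must be >= 0 and cluster_size > 0")
    # Ceiling division: number of clusters needed to hold the file.
    clusters = -(-file_size // cluster_size)
    return clusters * cluster_size


def slack(file_size: int, cluster_size: int = 4096) -> int:
    """Bytes lost to internal fragmentation for one file."""
    return size_on_disk(file_size, cluster_size) - file_size


if __name__ == "__main__":
    ten_mb = 10 * 1024 * 1024
    for size in (1, ten_mb, ten_mb + 1):
        print(size, size_on_disk(size), slack(size))
    # 1,000 files of 1 KB each on a volume with 64 KB clusters
    print(1000 * size_on_disk(1024, 64 * 1024))
```

With 4 KB clusters, a 1-byte file is charged 4,096 bytes, while a file of exactly 10 MB has no slack at all. The last line shows why small files are the expensive case: about 1 MB of data ends up occupying 65,536,000 bytes on 64 KB clusters.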

File System Structures

The very structures used by file systems also require extra space. For example, the file allocation table (FAT) in FAT32 and exFAT file systems stores information about each cluster on disk. As the number of clusters increases, the FAT itself takes up more space. The amount of overhead needed for the file system structures is generally proportional to the disk size – a larger disk means a larger FAT and more metadata.
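To get a feel for how the table grows with the volume, here is a rough estimate for FAT32. It assumes 4 bytes per cluster entry (FAT32 entries are 32 bits wide) and two copies of the table, which is the usual layout; it ignores reserved sectors and the space the table itself takes from the volume, so treat the result as an approximation. The function name `fat32_table_bytes` is hypothetical.

```python
def fat32_table_bytes(volume_bytes: int,
                      cluster_size: int = 4096,
                      fat_copies: int = 2) -> int:
    """Approximate space used by the FAT32 allocation tables."""
    bytes_per_entry = 4  # one 32-bit entry per cluster
    clusters = volume_bytes // cluster_size
    return clusters * bytes_per_entry * fat_copies


if __name__ == "__main__":
    GIB = 2**30
    MIB = 2**20
    for volume_gib in (8, 32, 64):
        table = fat32_table_bytes(volume_gib * GIB)
        print(f"{volume_gib} GiB volume: about {table // MIB} MiB of FAT")
```

Under these assumptions a 32 GiB volume with 4 KB clusters needs about 64 MiB for its two tables, and doubling the volume doubles that figure. Larger clusters shrink the table at the cost of more slack per file.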

Block Padding

Another factor is padding to meet sector size requirements. Hard disks read and write data in fixed-size blocks called sectors, traditionally 512 bytes and 4,096 bytes on most modern drives. File data must be stored in whole sectors, so if the last chunk of a file doesn’t fill a sector, the remainder is padding. With 512-byte sectors this wastes up to 511 bytes per file. Because a cluster is a whole number of sectors, this padding is part of the cluster rounding described earlier rather than an additional cost on top of it.

File System Journaling

Journaled file systems like NTFS and HFS+ use an extra data structure called a journal to enhance reliability. They require changes to metadata to be written sequentially to this journal before the main file system structures are updated. The journal takes up additional disk space but allows for safer recovery in case of power loss or system crashes. It adds redundancy to metadata so the file system can restore consistency.

Compression

Interestingly, compression can also leave a file larger than it started. Compression works well on redundant data such as text, but data that is already compressed, like JPEG images or most video, has little redundancy left. Putting such data into a format like ZIP often yields a result not much smaller than the original. The compression format itself adds extra data like headers and indexes. If compression doesn’t significantly shrink a file, the end result stored on disk can be slightly larger than the original uncompressed data.
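The effect is easy to reproduce with Python's standard `zlib` module. In this sketch, pseudo-random bytes stand in for data that has no redundancy left (an assumption made for the demonstration; real JPEG or video data behaves similarly but not identically), and a repeated phrase stands in for highly redundant text.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes of the zlib-compressed form of data."""
    return len(zlib.compress(data, 6))


# Fixed seed so the example is repeatable.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(100_000))
text = b"size on disk " * 8_000

if __name__ == "__main__":
    print("noise:", len(noise), "->", compressed_size(noise))
    print("text: ", len(text), "->", compressed_size(text))
```

The incompressible input comes out slightly larger than it went in, because the format's headers and checksums are added without any savings to offset them, while the repetitive text shrinks to a small fraction of its original size.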

Security Copies

Some file systems keep copies of files or metadata for added redundancy. FAT file systems typically store two copies of the file allocation table. NTFS organizes its directory indexes as B-trees and keeps a mirror of the first few records of its Master File Table (the $MFTMirr file), so the volume can still be read if those records are damaged. ZFS stores multiple copies of its metadata by default and can be configured, through its copies property, to keep extra copies of file data as well, which takes up more space.

Snapshots

One Windows feature that can noticeably increase the space used on NTFS volumes is Volume Shadow Copy. It makes snapshots of earlier file versions accessible from within Windows. A snapshot does not duplicate the whole volume; it preserves the old contents of blocks that change after it is taken. Each snapshot therefore takes up roughly as much space as the data changed since the previous one. If you have 100 GB of data and 10% of it changes between shadow copies, the total disk space used will be about 110 GB. Other file systems like Btrfs and ZFS offer copy-on-write snapshots, which grow in the same way as the live data diverges from them.
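The arithmetic in this example extends naturally to several snapshots. The sketch below assumes each snapshot preserves a separate set of changed data equal to a fixed percentage of the live data, which is a simplification: in practice the amount varies between snapshots and old snapshots are usually pruned. The function name and parameters are hypothetical.

```python
def total_with_snapshots(live_gb: float,
                         change_percent: float,
                         snapshots: int = 1) -> float:
    """Estimated space used by live data plus retained snapshots, in GB."""
    if snapshots < 0:
        raise ValueError("snapshots must be >= 0")
    per_snapshot = live_gb * change_percent / 100
    return live_gb + per_snapshot * snapshots


if __name__ == "__main__":
    for count in (0, 1, 5):
        print(count, "snapshots:", total_with_snapshots(100, 10, count), "GB")
```

One retained snapshot at a 10% change rate reproduces the 110 GB figure, and five of them bring the estimate to 150 GB, which is why trimming old snapshots often recovers more space than any other single step.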

Sparse Files

One mitigation technique used by modern file systems is support for sparse files. These are files with large gaps that contain only zeros. The file system records the gaps in metadata instead of allocating data blocks for them, so the space a sparse file occupies on disk is only that of its non-empty portions. This is one case where the on-disk size can be smaller than the stated file size. It greatly reduces wasted space for files that have large empty regions, like virtual hard disk images.
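A sparse file can be created and inspected from Python on most Unix-like systems. This sketch seeks past the end of an empty file and writes a single byte, then compares the logical size with the allocated size. It assumes a POSIX platform where `os.stat` reports `st_blocks` in 512-byte units; on platforms without that field the allocated size is returned as `None`. Whether the gap is actually left unallocated depends on the file system.

```python
import os
import tempfile


def make_sparse_file(path: str, logical_size: int) -> None:
    """Create a file of logical_size bytes that is almost entirely a hole."""
    with open(path, "wb") as f:
        f.seek(logical_size - 1)  # skip ahead without writing anything
        f.write(b"\0")            # one real byte marks the end of the file


def file_sizes(path: str):
    """Return (logical_size, allocated_size or None) in bytes."""
    st = os.stat(path)
    blocks = getattr(st, "st_blocks", None)  # not available on Windows
    allocated = blocks * 512 if blocks is not None else None
    return st.st_size, allocated


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "disk.img")
        make_sparse_file(path, 8 * 1024 * 1024)
        logical, allocated = file_sizes(path)
        print("logical size:  ", logical)
        print("allocated size:", allocated)
```

On a file system with sparse file support, the allocated size should come out far smaller than the 8 MB logical size.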

Conclusion

In summary, the extra space used by files on disk comes from:

- File system metadata like FATs and B-trees

- Cluster size and internal fragmentation

- Block padding to match sector sizes

- Redundant data structures for reliability

- Potential compression overhead

- Snapshot copies of files

The good news is that newer file systems employ techniques like sparse files and smaller cluster sizes to minimize these forms of overhead. But some level of extra space usage is inevitable to enable reliable storage and performance. For ordinary files, the space on disk will almost always be at least as large as the stated file size.

Frequently Asked Questions

Why does my 16 GB hard drive show less than 16 GB capacity?

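Out of the box, your drive’s usable capacity will appear lower than the advertised size. Most of the gap is a matter of units: manufacturers define 1 GB as 1,000,000,000 bytes, while Windows counts in units of 1,073,741,824 bytes but still labels them GB. A 16 GB drive therefore shows up as about 14.9 GB. On top of that, formatting sets aside some space for file system structures, such as the file allocation table on FAT volumes or the Master File Table on NTFS. NTFS also reserves about 12.5% of the volume by default as a zone for the MFT to grow into, but that zone still counts as free space and can hold ordinary files once the rest of the volume fills up.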
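Part of this gap is purely a units effect: drive makers count 1 GB as 10^9 bytes, while Windows reports capacity in units of 2^30 bytes and still labels them GB. A minimal conversion, with a function name chosen for this illustration, looks like this:

```python
def advertised_gb_to_reported(advertised_gb: float) -> float:
    """Convert decimal gigabytes (10**9 bytes) to binary units (2**30 bytes)."""
    total_bytes = advertised_gb * 10**9
    return total_bytes / 2**30


if __name__ == "__main__":
    for gb in (16, 500, 1000):
        print(f"{gb} GB advertised is about "
              f"{advertised_gb_to_reported(gb):.1f} GB as reported")
```

For a 16 GB drive this gives about 14.9, before any file system structures are subtracted. The relative gap is about 7% at the gigabyte scale and grows slightly with each larger unit prefix.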

Why do large video files take up more space than their properties show?

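Video files are not a special case, although their size makes any discrepancy more visible. Video codecs like H.264 organize frames into groups of pictures (GOPs), and the container adds headers and indexes, but all of that is part of the file itself and is already counted in the size shown in its properties. On disk, the file is rounded up to a whole number of clusters like any other, which for a multi-gigabyte video is a negligible fraction. A larger gap usually comes from two tools reporting in different units, or from snapshots and other retained copies of the file.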

Why does copying a file take up double the space temporarily?

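Copying a file creates a second, independent copy, so the extra space equals the file size and stays in use until you delete one of the two. Moving a file to a different volume works like a copy followed by a delete: the data is written to the destination before the original is removed, so both occupy space until the operation completes. Moving a file within the same volume only updates its directory entry and needs no extra space. For large transfers, ensure adequate free space exists on the destination to avoid out-of-space errors.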
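A free-space check before a large transfer can be sketched with the standard library. `shutil.disk_usage` reports the total, used, and free bytes of the volume holding a path; the helper names and the idea of a safety margin are assumptions for this example, and the check does not account for cluster rounding or for other programs writing at the same time.

```python
import os
import shutil


def can_copy(file_size: int, free_bytes: int, safety_margin: int = 0) -> bool:
    """True if the copy fits and still leaves safety_margin bytes free."""
    return free_bytes - file_size >= safety_margin


def can_copy_to(src: str, dest_dir: str,
                safety_margin: int = 100 * 1024 * 1024) -> bool:
    """Check the destination volume before copying src into dest_dir."""
    file_size = os.path.getsize(src)
    free_bytes = shutil.disk_usage(dest_dir).free
    return can_copy(file_size, free_bytes, safety_margin)


if __name__ == "__main__":
    usage = shutil.disk_usage(".")
    print("free bytes on this volume:", usage.free)
    print("room for a 1 GB file:", can_copy(10**9, usage.free))
```

Separating the pure comparison from the disk query keeps the logic easy to test, and the margin leaves headroom for the file system's own bookkeeping.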

How can I reduce the amount of wasted space on my hard drive?

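Using a file system with a small cluster size like 4 KB minimizes internal fragmentation. Avoid re-compressing data that is already compressed, since that only adds overhead. Use sparse files where the file system and the application support them. Remove unnecessary snapshots, which often recovers far more space than the other measures. Disabling journaling recovers very little space and sacrifices crash safety, so it is rarely worthwhile.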

Impact of High Disk Usage

While the extra space used by files doesn’t directly cause problems, high disk usage from any source can impact performance and reliability:

Slower file access and transfer times:

When disks fill up, more time is needed to locate free space for writes. File fragments also get more scattered, increasing seek times. This causes noticeable lags when saving and opening files.

Faster wear on SSDs:

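Solid state drives have a finite number of write cycles before cells wear out. When an SSD is nearly full, its controller has fewer free blocks to work with and must move existing data around more often to make room for new writes, an effect known as write amplification. The drive therefore endures more internal writes for the same amount of saved data, which can shorten its usable life.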

Greater chance of fragmentation:

Free space becomes severely fragmented when disks fill up. Excessive fragmentation leads to slower file reads/writes. Defragmenting helps coalesce free space but is less effective on nearly full volumes.

Higher disk failure risk:

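A hard drive does not wear out mechanically just because it is full, but the heavy fragmentation that tends to come with a full disk makes the head actuator seek more for the same amount of work. That extra activity adds heat and wear over time. Keeping some breathing room reduces it; 25% free space or more is a conservative rule of thumb.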

Difficulty updating files:

Overwriting part of a file in place needs no new space, but growing a file does, and many applications save by writing a complete new copy before replacing the old one. When little free space remains and it is split into small fragments, such updates may be aborted or may leave the file heavily fragmented.

Unable to create restore points:

Windows requires free space to create restore points and shadow copies. Running out of space prevents backups of earlier versions when files get saved or deleted. Losing these restore points makes it harder to recover from mistakes.

Best Practices to Reclaim Disk Space

Since sizable free space is ideal for stability and performance, reclaiming used space can be beneficial. Here are some best practices for freeing space on full disks:

Delete unneeded files:

The most obvious starting point: get rid of files and folders you no longer need. Sort folders by size to find good candidates for removal. But don’t delete system files, since removing them can cause problems.
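Finding the biggest candidates can be automated. The sketch below walks a folder tree and returns the largest files by logical size; the function name `largest_files` is made up for this example, symbolic links are skipped, and files that cannot be read are silently ignored. It only reports, it never deletes.

```python
import heapq
import os


def largest_files(root: str, count: int = 10):
    """Return up to count (size_in_bytes, path) pairs, largest first."""
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if os.path.islink(path):
                    continue  # do not follow links out of the tree
                found.append((os.path.getsize(path), path))
            except OSError:
                continue  # file vanished or is not accessible
    return heapq.nlargest(count, found)
```

Calling `largest_files("/home/me/Downloads", 20)` (the path is only an example) would list the twenty largest files under that folder, which is usually enough to spot forgotten installers and disk images.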

Remove duplicate files:

Many systems accumulate copies of files in multiple folders. Delete the extra copies to immediately recover space with no loss of data.
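One common way to find duplicates, sketched here under the assumption that two files with the same size and the same SHA-256 digest are identical, is to group files by size first and hash only the groups with more than one member. The function names are hypothetical, and the script only lists duplicates; deciding which copy to delete is left to you.

```python
import hashlib
import os
from collections import defaultdict


def file_digest(path: str, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks to keep memory use low."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root: str):
    """Return a sorted list of groups (lists of paths) with identical content."""
    by_size = defaultdict(list)
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                by_size[os.path.getsize(path)].append(path)

    groups = []
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a unique size cannot have a duplicate
        by_hash = defaultdict(list)
        for path in paths:
            by_hash[file_digest(path)].append(path)
        groups.extend(sorted(g) for g in by_hash.values() if len(g) > 1)
    return sorted(groups)
```

Grouping by size first avoids hashing most files at all, which matters on large photo or video collections.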

Clear temporary files:

Web browsers, apps, and media software often cache files temporarily. These can be safely deleted in most cases to recover space.

Compress rarely used data:

Compressing infrequently accessed data into a .zip archive can reduce its size with minimal inconvenience when you do need it.
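As a minimal sketch, a folder can be packed into a compressed archive with Python's standard `zipfile` module. The function name `archive_folder` is an assumption for this example, and the archive should be written outside the folder being archived so it does not try to include itself. Verify the archive before deleting the originals.

```python
import os
import zipfile


def archive_folder(folder: str, archive_path: str) -> int:
    """Zip every file under folder; return the archive size in bytes."""
    with zipfile.ZipFile(archive_path, "w",
                         compression=zipfile.ZIP_DEFLATED) as zf:
        for dirpath, _dirnames, filenames in os.walk(folder):
            for name in filenames:
                full_path = os.path.join(dirpath, name)
                # Store paths relative to the folder, not absolute paths.
                zf.write(full_path, arcname=os.path.relpath(full_path, folder))
    return os.path.getsize(archive_path)
```

Text, logs, and documents usually shrink considerably, while photos, music, and video are already compressed and will save little or nothing.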

Move data to alternate drives:

If you have data on multiple drives, redistributing the contents can free up space on nearly full volumes. Move data like photos, videos, and music files to underutilized drives.

Resize oversized page/swap files:

Check the size of the swap and page files. Reducing them to a size proportionate to your RAM capacity can recover gigabytes of disk space.

Upgrade to a larger drive:

When disk usage consistently meets or exceeds capacity, upgrading to a larger drive may be warranted. Cloning the old drive moves all data without reinstalling the OS.

Do a clean reinstall:

As a last resort, starting fresh by erasing the disk and reinstalling the OS can wipe out all fragmentation and temporary junk files.

Conclusion

The discrepancy between file sizes and disk usage arises from the necessary overheads of storage systems. File system structures, clusters, and padding all contribute to the extra space consumed. While we can reduce some of the overhead, additional size on disk is inevitable. When managing disk space, it’s important to anticipate actual utilization exceeding the sum of the file sizes. With planning and best practices, adequate free space can be maintained for performance and stability.