What is Cache Memory?

Cache memory is a small, high-speed storage area built into or placed very close to the CPU (Source: https://redisson.org/glossary/cache-memory.html). It acts as a buffer between the CPU and main memory, holding copies of frequently used data and instructions so that the CPU can usually avoid the much slower trip to main memory. Cache is organized into several levels (Source: https://www.devx.com/terms/cache-memory/):

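- Level 1 (L1) cache – Located inside each CPU core; the smallest and fastest level.

- Level 2 (L2) cache – Larger and somewhat slower than L1. On older systems it sat on the motherboard, but on modern processors it is on the CPU die, usually one per core.

- Level 3 (L3) cache – Larger and slower than L1 and L2, and usually shared by all cores on the chip.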

All of these levels are CPU caches: they hold the instructions and data the processor needs. The term "cache" is also used more broadly, for example for a disk cache, which keeps frequently accessed disk data in faster memory. In each case, cache improves performance by reducing the time needed to reach important data and instructions.

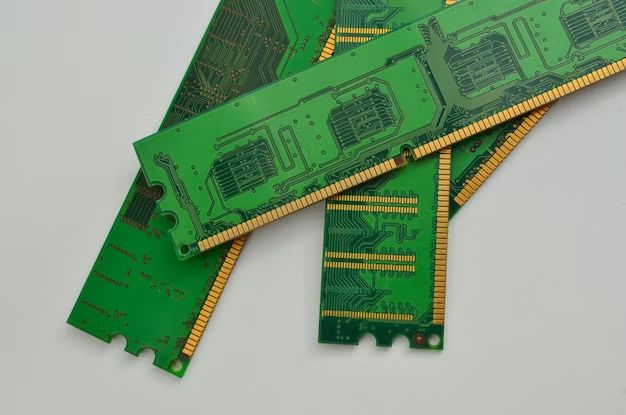

What is RAM?

RAM, which stands for Random Access Memory, is a type of computer data storage that holds the data and programs the CPU needs while the computer is running [1]. "Random access" means that any byte can be read or written directly, in roughly the same amount of time wherever it is located, without first passing over the preceding bytes. This is in contrast to sequential-access storage such as magnetic tape (and, to a lesser degree, disks and drum memory), where the time needed to reach data depends on its physical position [2].
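To make the contrast concrete, here is a toy cost model in Python. The step counts are illustrative assumptions, not real timings, and the function names are hypothetical: RAM reaches any address in one step, while a tape head must wind past every cell between its current position and the target.

```python
# Toy cost model (illustrative assumptions, not real hardware timings).

def ram_read_cost(addresses):
    """Random access: each read costs one step, whatever the address."""
    return len(addresses)


def tape_read_cost(addresses, start=0):
    """Sequential access: wind the head to each cell, read it, and end up just past it."""
    position = start
    cost = 0
    for addr in addresses:
        cost += abs(addr - position) + 1  # wind to the cell, then read it
        position = addr + 1               # reading advances the head by one cell
    return cost


if __name__ == "__main__":
    in_order = [0, 1, 2, 3]
    scattered = [900, 5, 450, 10]
    print(ram_read_cost(in_order), tape_read_cost(in_order))    # 4 4
    print(ram_read_cost(scattered), tape_read_cost(scattered))  # 4 2685
```

Reading in order costs the tape no more than RAM, but a scattered pattern forces long rewinds, which is exactly the cost that random access avoids.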

There are two main types of RAM used in computers:

- DRAM (Dynamic RAM) – Each bit is stored in a separate capacitor within an integrated circuit. The capacitors leak charge, so the information fades unless it is refreshed periodically. DRAM is dense and inexpensive, which makes it the usual choice for main memory.

- SRAM (Static RAM) – Typically uses six transistors to store each bit. SRAM keeps its contents without refreshing as long as power is supplied. It is faster than DRAM but larger and more expensive per bit, which is why it is used for CPU caches.
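The DRAM refresh requirement can be illustrated with a toy Python model of a single cell. The leak rate and read threshold are made-up values chosen only for illustration (real retention times vary by device and temperature), and `read_after` is a hypothetical helper; the 64 ms interval mirrors a common DRAM refresh window.

```python
import math

# Toy model of one DRAM cell storing a 1. LEAK_PER_MS and READ_THRESHOLD are
# hypothetical values chosen for illustration only.
LEAK_PER_MS = 0.005       # exponential decay rate of the stored charge, per millisecond
READ_THRESHOLD = 0.5      # charge above this level is read back as a 1
REFRESH_INTERVAL_MS = 64  # a common DRAM refresh window


def read_after(total_ms, refresh_every_ms=None):
    """Return the bit read back after total_ms, optionally refreshing periodically."""
    charge = 1.0                     # freshly written 1
    decay = math.exp(-LEAK_PER_MS)   # charge multiplier for each millisecond
    for t in range(1, total_ms + 1):
        charge *= decay              # the capacitor leaks a little every millisecond
        if refresh_every_ms and t % refresh_every_ms == 0:
            # A refresh senses the cell and rewrites it at full strength.
            charge = 1.0 if charge > READ_THRESHOLD else 0.0
    return 1 if charge > READ_THRESHOLD else 0


if __name__ == "__main__":
    print(read_after(200))                                        # 0: the 1 has leaked away
    print(read_after(200, refresh_every_ms=REFRESH_INTERVAL_MS))  # 1: refresh preserved it
```

Left alone for 200 ms, the stored 1 leaks below the threshold and reads back as 0; refreshing every 64 ms restores the charge before it gets that low.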

Related memory types include VRAM (Video RAM), used in graphics cards, and CPU cache, which is built from SRAM and holds frequently used data and instructions [3].

Differences Between Cache and RAM

Cache and RAM serve complementary purposes in computing, but there are some key differences between the two technologies:

Volatility: Both are volatile. Main memory (DRAM) loses its contents when power is removed, and so does cache: the static RAM (SRAM) used for caches needs no periodic refresh, but it still holds data only while powered. Cache contents are also short-lived in normal operation, since cache lines are constantly overwritten (Source).

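Speed: Cache memory is faster than main system RAM. Cache access times range from around a nanosecond for L1 to roughly 10-15 nanoseconds for the larger levels, compared with about 60-100 nanoseconds for main memory. The proximity of cache to the CPU reduces latency. RAM, in turn, is far faster than secondary storage such as hard drives, whose access times are measured in milliseconds (Source).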

Size: Cache sizes range from kilobytes (L1) up to tens of megabytes (L3) in consumer devices, while system RAM in modern computers is generally 4-64 GB. Keeping each cache level small is part of what keeps it fast, so frequently used data stays readily accessible (Source).

Location: Cache memory is integrated directly onto the CPU chip (or, in some designs, into the same package). RAM, in contrast, typically sits on the motherboard, farther from the CPU (Source).

Cost: Cache has a higher cost per megabyte than RAM, but only small quantities are needed, and the performance gain justifies the extra cost (Source).

Is Cache Considered a Type of RAM?

While cache is built from RAM technology, it serves a distinct purpose, so it is not usually considered a type of RAM. Cache is a small amount of high-speed memory located on or close to the CPU that stores recently accessed data to speed up later requests for it. RAM, in contrast, is the main system memory that temporarily holds data used by running applications and the operating system.

Although cache is built from static RAM (SRAM), while main memory uses dynamic RAM (DRAM), the two are architecturally and functionally different. Cache is optimized for very fast access and is tied to the processor: lower levels are private to individual cores, while the last level is typically shared by the cores on one chip. It is managed automatically by the CPU hardware, not by the operating system. Cache holds only a subset of data selected by recent usage, while RAM holds the data and code that running programs are currently using.

In summary, while cache leverages RAM technology, it acts as a buffer that accelerates access to the most actively used subset of data. It is not a form of primary system memory like RAM; instead, it complements RAM to improve performance.

Advantages of Cache

One of the main benefits of cache memory is much faster access to frequently used data. Because recently accessed data and instructions are kept in fast SRAM, the processor can reach them much more quickly than main memory (DRAM). Main memory access typically takes about 60-100 nanoseconds, while cache access takes about 10-15 nanoseconds or less; the smallest L1 caches respond in just a few clock cycles (Source).

Every request served from cache is one fewer slow main memory access, so a high hit rate significantly improves system performance. When the processor needs data that is already cached, it gets it quickly instead of waiting on main memory, which helps avoid stalls and delays in instruction execution.
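One standard way to quantify this benefit is average memory access time (AMAT): hit time plus miss rate times miss penalty. The sketch below plugs in illustrative values from the ranges quoted above (a 10 ns cache hit and a 100 ns main memory access, treated as the whole miss penalty); the miss rates are hypothetical.

```python
def amat(hit_time_ns, miss_rate, miss_penalty_ns):
    """Average memory access time: every access pays the hit time; misses also pay the penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


if __name__ == "__main__":
    HIT_NS, MEMORY_NS = 10, 100  # illustrative values from the ranges quoted above
    print(f"no cache: {MEMORY_NS} ns per access")
    for miss_rate in (0.02, 0.05, 0.20):  # hypothetical miss rates
        print(f"miss rate {miss_rate:.0%}: {amat(HIT_NS, miss_rate, MEMORY_NS):.1f} ns")
```

At a 5% miss rate the average access costs 10 + 0.05 × 100 = 15 ns, far below the 100 ns of going to main memory every time; the advantage shrinks quickly as the miss rate rises.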

Cache memory also reduces traffic between the processor and main memory. Because frequently used data is served locally from cache, fewer external memory accesses are needed, which reduces congestion on the memory bus and further improves overall performance (Source).

Disadvantages of Cache

While cache memory provides significant performance benefits, it also has downsides. Two key disadvantages are that it takes up valuable space on the CPU chip and that it can return stale data:

Cache memory is implemented directly on the CPU chip, so it takes up valuable real estate that could otherwise be used for additional processing cores or other components. The more cache capacity that is added, the larger the CPU chip needs to be, which increases costs. There is a trade-off between the performance boost provided by larger cache sizes and the expense of a larger CPU die size [1].

Additionally, because cache memory holds copies of data from main memory, a copy can fall out of date and the cache can return stale data. When the same data is modified elsewhere, for example by another core or by a device writing directly to memory, the cached copy must be updated or invalidated; otherwise the processor can read outdated values, leading to errors. In multicore processors, hardware cache coherence protocols (such as MESI) keep the cores' caches consistent automatically, but writes that bypass the cache, such as some DMA transfers from devices, may still require software to flush or invalidate cache lines explicitly [2].
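The stale-data problem can be reproduced with a deliberately non-coherent toy model in Python. One dictionary stands in for main memory and another for the cache, and the hypothetical `device_write` method models a device that writes memory directly without updating the cache. Real hardware is far more involved; the point is only to show why a cached copy must be invalidated when memory changes underneath it.

```python
class ToyCachedMemory:
    """Deliberately non-coherent toy: the cache is never told about device writes."""

    def __init__(self):
        self.memory = {}  # stands in for main memory
        self.cache = {}   # stands in for the CPU cache

    def cpu_read(self, addr):
        if addr not in self.cache:                     # miss: fill from memory
            self.cache[addr] = self.memory.get(addr, 0)
        return self.cache[addr]                        # hit: may be stale!

    def device_write(self, addr, value):
        """A device (e.g. DMA) updates memory without going through the cache."""
        self.memory[addr] = value

    def invalidate(self, addr):
        """Drop the cached copy so the next read refetches from memory."""
        self.cache.pop(addr, None)


if __name__ == "__main__":
    m = ToyCachedMemory()
    m.memory[0x40] = 1
    print(m.cpu_read(0x40))  # 1 (miss, now cached)
    m.device_write(0x40, 2)
    print(m.cpu_read(0x40))  # 1 (stale hit: memory now holds 2)
    m.invalidate(0x40)
    print(m.cpu_read(0x40))  # 2 (refetched after invalidation)
```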

When is Cache Used?

Cache memory stores recently accessed data to speed up later requests for that same data (Source). Caching works because programs tend to access the same data or instructions repeatedly over short periods of time (temporal locality) and to access data located near recently used data (spatial locality); together these tendencies are called locality of reference. By keeping a copy of that data in small, high-speed storage, the cache lets the CPU access it more quickly, improving overall system performance.

When the CPU needs to read from or write to main memory, it first checks the cache. If the required data is in the cache (a cache hit), the CPU reads or writes the cached copy instead of accessing the slower main memory. If the data is not in the cache (a cache miss), it must be fetched from main memory, which takes longer. The fetched data, as a fixed-size block called a cache line, is then stored in the cache for potential future access.
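The hit/miss check can be sketched as a minimal direct-mapped cache in Python. The geometry (64-byte lines, 8 lines) is an illustrative assumption, and the model only tracks which memory block each line holds, not the data itself. Each address is split into a block number, an index that selects the one line the block may occupy, and a tag that identifies which block is currently there.

```python
class DirectMappedCache:
    """Toy direct-mapped cache that records hits and misses (no data is stored)."""

    def __init__(self, line_size=64, num_lines=8):
        self.line_size = line_size       # bytes per cache line (assumed)
        self.num_lines = num_lines       # number of lines in the cache (assumed)
        self.tags = [None] * num_lines   # tag held by each line; None means empty
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Look up one byte address; on a miss, load the whole line containing it."""
        block = address // self.line_size  # memory block (line-sized chunk) holding the byte
        index = block % self.num_lines     # the only cache line this block can use
        tag = block // self.num_lines      # distinguishes blocks that share that line
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.misses += 1
        self.tags[index] = tag             # fetch from main memory, replacing the old line
        return False


if __name__ == "__main__":
    sequential = DirectMappedCache()
    for addr in range(256):                # read 256 consecutive bytes
        sequential.access(addr)
    print(sequential.hits, sequential.misses)    # 252 4

    conflicting = DirectMappedCache()
    for _ in range(3):
        conflicting.access(0)              # block 0 -> line 0
        conflicting.access(512)            # block 8 -> also line 0
    print(conflicting.hits, conflicting.misses)  # 0 6
```

The sequential scan misses only once per 64-byte line, because each miss brings in the neighboring bytes as well (spatial locality). The second loop shows conflict misses: addresses 0 and 512 map to the same line, so they keep evicting each other even though the rest of the cache is empty.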

Caching is used at many levels of a system, in both hardware and software. Caches commonly store:

- Recently accessed disk blocks/sectors that are likely to be accessed again

- Register values saved when the operating system switches between processes

- Network buffer data, to reduce latency

- Frequently accessed program instructions

- Data related to the operating system, drivers, etc.

- Output data waiting to be transferred to peripherals

By exploiting locality of reference, and with well-tuned cache management, cache memory substantially speeds up data access and improves overall system performance.

Cache Management

Cache management refers to the policies and techniques used to make effective use of cache memory and improve overall system performance. One key aspect is the replacement policy, which determines which data is evicted when space is needed. A widely used policy is least recently used (LRU), which removes the data that hasn’t been accessed for the longest time. It is based on temporal locality: data that has been accessed recently is likely to be accessed again soon. Because tracking exact recency for every line is expensive in hardware, processors often implement approximations of LRU (pseudo-LRU). Other policies include first in, first out (FIFO), least frequently used (LFU), and random replacement, each with its own trade-offs between implementation complexity and hit rate (Tripathy, 2022).
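The difference between LRU and FIFO can be sketched in Python by counting misses on a short access trace. The trace and the two-entry capacity are hypothetical. `OrderedDict.move_to_end` and `popitem(last=False)` are standard-library calls that make exact LRU bookkeeping easy in software, whereas hardware would use cheaper approximations.

```python
from collections import OrderedDict, deque


def lru_misses(trace, capacity):
    """Count misses under least-recently-used replacement."""
    cache = OrderedDict()  # iteration order runs from least to most recently used
    misses = 0
    for key in trace:
        if key in cache:
            cache.move_to_end(key)         # a hit makes the key most recently used
        else:
            misses += 1
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used key
            cache[key] = None
    return misses


def fifo_misses(trace, capacity):
    """Count misses under first-in, first-out replacement."""
    cache = set()
    arrival = deque()  # keys in the order they were loaded
    misses = 0
    for key in trace:
        if key not in cache:               # hits do not change FIFO order
            misses += 1
            if len(cache) >= capacity:
                cache.remove(arrival.popleft())  # evict the oldest arrival
            cache.add(key)
            arrival.append(key)
    return misses


if __name__ == "__main__":
    trace = ["A", "B", "A", "C", "A", "D", "A", "E"]  # "A" is reused often
    print("LRU misses: ", lru_misses(trace, capacity=2))   # 5
    print("FIFO misses:", fifo_misses(trace, capacity=2))  # 6
```

FIFO evicts "A" simply because it arrived first, even though it is the most heavily reused item; LRU keeps it because it was used recently, which is the temporal-locality argument made above.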

In addition to replacement policies, cache management also involves write policies, which control how writes reach main memory. The two main approaches are write-through and write-back. With write-through, every write updates both the cache and main memory. This keeps main memory consistent with the cache, but every write generates memory traffic and can add write latency. With write-back, writes update only the cache; modified ("dirty") data is written to main memory later, typically when the line is evicted. This reduces latency and memory traffic, but data that has not yet been written back can be lost in a crash or power failure (IBM, 2022). Overall, well-chosen cache management policies are crucial for optimizing performance.
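The trade-off between the two write policies can be sketched in Python by counting main memory writes. `ToyWriteCache` is a hypothetical simplification: it never runs out of space, so dirty data reaches memory only when `flush` is called, standing in for eviction.

```python
class ToyWriteCache:
    """Toy cache that counts main memory writes under a given write policy."""

    def __init__(self, write_back):
        self.write_back = write_back
        self.lines = {}        # address -> value held in the cache
        self.dirty = set()     # addresses changed in cache but not yet in memory
        self.memory = {}       # stands in for main memory
        self.memory_writes = 0

    def write(self, addr, value):
        self.lines[addr] = value
        if self.write_back:
            self.dirty.add(addr)          # defer the memory update
        else:
            self.memory[addr] = value     # write-through: update memory immediately
            self.memory_writes += 1

    def flush(self):
        """Copy dirty data to memory, as would happen on eviction or shutdown."""
        for addr in sorted(self.dirty):
            self.memory[addr] = self.lines[addr]
            self.memory_writes += 1
        self.dirty.clear()


if __name__ == "__main__":
    for policy in (False, True):
        cache = ToyWriteCache(write_back=policy)
        for i in range(100):
            cache.write(0, i)             # update the same counter 100 times
        before = cache.memory_writes
        cache.flush()
        name = "write-back" if policy else "write-through"
        print(f"{name}: {before} memory writes before flush, {cache.memory_writes} after")
```

Write-through sends all 100 updates to memory; write-back sends one. Until the flush, though, the write-back version's memory still lacks the latest value, which is exactly the data that would be lost if power failed at that moment.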

Improving Cache Performance

There are a few key ways to improve the performance of cache memory in a computer system:

Increasing the cache size can significantly boost performance by reducing miss rates. A larger cache holds more data and instructions, so the processor finds what it needs in cache more often instead of waiting for main memory. However, larger caches cost more, take up more space on the CPU die, and take longer to access, which is one reason processors use several levels of cache instead of a single large one [1].
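The effect of cache size on miss rate can be sketched by replaying the same access trace against LRU caches of different capacities. The workload, a loop over six memory blocks repeated ten times, is hypothetical. It shows a sharp cliff: while the working set is larger than the cache, LRU evicts each block just before it is needed again, and once the working set fits, only the first pass misses.

```python
from collections import OrderedDict


def misses_for_capacity(trace, capacity):
    """Count misses for a fully associative LRU cache holding `capacity` blocks."""
    cache = OrderedDict()
    misses = 0
    for block in trace:
        if block in cache:
            cache.move_to_end(block)       # hit: mark as most recently used
        else:
            misses += 1
            cache[block] = None
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return misses


if __name__ == "__main__":
    working_set = list(range(6))           # six blocks touched in a loop
    trace = working_set * 10               # the loop runs ten times
    for capacity in (2, 4, 6, 8):
        m = misses_for_capacity(trace, capacity)
        print(f"capacity {capacity}: miss rate {m / len(trace):.0%}")
```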

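Optimizing software to maximize cache hits is another effective technique. Loop blocking (tiling), for example, restructures loops to work on small blocks of data that fit in cache, so each block is reused while it is still cached instead of being fetched from main memory again and again. Aligning data structures to cache line boundaries also helps, because a structure that straddles two lines requires two line fetches [2].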
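Loop blocking can be illustrated by counting cache misses rather than timing Python code (Python lists do not lay data out the way a C array does, so wall-clock timings would not show the effect). The sketch below generates the memory accesses of a matrix transpose, maps each element to a cache line assuming a row-major layout, and replays them through a small fully associative LRU cache. The 64x64 matrix, 8 elements per 64-byte line, and 32-line cache are all illustrative assumptions.

```python
from collections import OrderedDict

# Illustrative assumptions: a 64x64 matrix of 8-byte elements stored row by row,
# 64-byte cache lines (8 elements per line), and a tiny 32-line LRU cache.
N = 64
ELEMS_PER_LINE = 8
CACHE_LINES = 32


def count_misses(accesses):
    """Replay (array, row, col) accesses through a fully associative LRU cache of lines."""
    cache = OrderedDict()
    misses = 0
    for array, row, col in accesses:
        line = (array, (row * N + col) // ELEMS_PER_LINE)  # row-major address -> line
        if line in cache:
            cache.move_to_end(line)          # hit: mark as most recently used
        else:
            misses += 1
            cache[line] = None
            if len(cache) > CACHE_LINES:
                cache.popitem(last=False)    # evict the least recently used line
    return misses


def naive_transpose():
    """Accesses made by B[j][i] = A[i][j] with plain nested loops."""
    for i in range(N):
        for j in range(N):
            yield ("A", i, j)  # read A[i][j]: walks along a row of A
            yield ("B", j, i)  # write B[j][i]: jumps to a new row of B every step


def blocked_transpose(block=8):
    """The same accesses, processed one block x block tile at a time (N % block == 0)."""
    for ii in range(0, N, block):
        for jj in range(0, N, block):
            for i in range(ii, ii + block):
                for j in range(jj, jj + block):
                    yield ("A", i, j)
                    yield ("B", j, i)


if __name__ == "__main__":
    print("naive misses:  ", count_misses(naive_transpose()))
    print("blocked misses:", count_misses(blocked_transpose()))
```

With these parameters the naive loop misses on every write to B, because consecutive writes land in different lines and each line is evicted before the loop comes back to it. An 8x8 tile touches only 16 lines at a time, so each line of A and B is loaded exactly once.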

Hardware prefetching predicts upcoming cache misses and fetches data ahead of time, so it is already in the cache when the processor requests it. This helps hide the latency of main memory. Accurate prediction is key: useful prefetches save time, while useless ones waste memory bandwidth and can evict data that is still needed [1].
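A very simple form of hardware prefetching, next-line prefetching, can be sketched in Python. It is one of the simplest possible schemes, not a description of any particular processor, and the hypothetical `simulate` helper ignores timing (it assumes every prefetch arrives before it is needed) and uses an unbounded cache. It counts demand misses, which the processor would wait on, and wasted prefetches, which cost bandwidth without helping.

```python
def simulate(addresses, line_size=64, prefetch_next_line=False):
    """Return (demand misses, wasted prefetches) for a stream of byte addresses.

    Toy model: unbounded cache, and every prefetch is assumed to arrive in time.
    """
    cached = set()
    prefetched_unused = set()  # lines loaded by the prefetcher but not yet used
    misses = 0
    for addr in addresses:
        line = addr // line_size
        prefetched_unused.discard(line)       # a demand access uses any prefetch of this line
        if line not in cached:
            misses += 1                       # the processor must wait for this line
            cached.add(line)
        if prefetch_next_line and line + 1 not in cached:
            cached.add(line + 1)              # fetch the next line ahead of time
            prefetched_unused.add(line + 1)
    return misses, len(prefetched_unused)


if __name__ == "__main__":
    sequential = range(0, 64 * 64, 8)        # every 8th byte across 64 lines
    scattered = [0, 6400, 128000]            # lines 0, 100 and 2000
    print(simulate(sequential))                           # (64, 0)
    print(simulate(sequential, prefetch_next_line=True))  # (1, 1)
    print(simulate(scattered, prefetch_next_line=True))   # (3, 3)
```

On a sequential stream the prefetcher removes all but the first miss; on a scattered pattern it removes none and every prefetch is wasted, which is why real prefetchers try to detect access patterns before fetching.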

Overall, carefully optimizing cache size, software code, and prefetching can substantially boost system performance by raising hit rates and reducing the miss penalty.

The Future of Cache

As processors continue to get faster, the speed gap between CPUs and main memory grows larger. This increases the need for faster caching technologies to bridge that performance gap. Some newer cache memory technologies that aim to improve speed and efficiency include:

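Embedded DRAM (eDRAM) builds DRAM cells into the processor die or package. It is considerably denser than SRAM, so a much larger cache fits in the same area, at the cost of somewhat higher latency than SRAM. IBM has used eDRAM for large on-die L3 caches in its POWER processors, and some Intel processors have paired the CPU with an eDRAM L4 cache in the same package.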

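Advances in 3D die stacking allow extra cache to be stacked directly on top of the CPU die. This adds cache capacity close to the cores, with far lower latency and higher bandwidth than going out to main memory. AMD's 3D V-Cache, which stacks an additional L3 cache die on its processors, is one shipping example, and Intel has also used 3D die stacking in its products.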

Improvements in cache management through smarter caching algorithms and predictive caching techniques help optimize cache performance. Hardware and software optimizations like larger cache line sizes and prefetching aim to reduce cache misses.

While caches will continue to get faster, their fundamental purpose remains the same – to bridge the gap between the CPU and main memory access speeds. Emerging memory technologies combined with software optimizations will help caches become an even more crucial component of computer systems.